Hitachi Streaming Data Platform

Product Overview

MK-93HSDP003-04

© 2014 , 2016 Hitachi, Ltd. All rights reserved.

No part of this publication may be reproduced or transmitted in any form or by any means, electronic

or mechanical, including photocopying and recording, or stored in a database or retrieval system for

any purpose without the express written permission of Hitachi, Ltd.

Hitachi , Ltd., reserves the right to make changes to this document at any time without notice and

assumes no responsibility for its use. This document contains the most current information available

at the time of publication. When new or revised information becomes available, this entire document

will be updated and distributed to all registered users.

Some of the features described in this document might not be currently available. Refer to the most

recent product announcement for information about feature and product availability, or contact

Hitachi, Ltd., at

https://support.hds.com/en_us/contact-us.html.

Notice:Hitachi , Ltd. products and services can be ordered only under the terms and conditions of the

applicable Hitachi Data Systems Corporation agreements. The use of Hitachi , Ltd., products is

governed by the terms of your agreements with Hitachi Data Systems Corporation.

By using this software, you agree that you are responsible for:

1. Acquiring the relevant consents as may be required under local privacy laws or otherwise from

employees and other individuals to access relevant data; and

2. Verifying that data continues to be held, retrieved, deleted, or otherwise processed in

accordance with relevant laws.

Hitachi is a registered trademark of Hitachi, Ltd., in the United States and other countries. Hitachi

Data Systems is a registered trademark and service mark of Hitachi, Ltd., in the United States and

other countries.

Archivas, BlueArc, Essential NAS Platform, HiCommand, Hi-Track, ShadowImage, Tagmaserve,

Tagmasoft, Tagmasolve, Tagmastore, TrueCopy, Universal Star Network, and Universal Storage

Platform are registered trademarks of Hitachi Data Systems Corporation.

AIX, AS/400, DB2, Domino, DS6000, DS8000, Enterprise Storage Server, ESCON, FICON, FlashCopy,

IBM, Lotus, MVS, OS/390, RS/6000, S/390, System z9, System z10, Tivoli, VM/ESA, z/OS, z9, z10,

zSeries, z/VM, and z/VSE are registered trademarks and DS6000, MVS, and z10 are trademarks of

International Business Machines Corporation.

Microsoft is either a registered trademark or a trademark of Microsoft Corporation in the United States

and/or other countries.

Linux(R) is the registered trademark of Linus Torvalds in the U.S. and other countries.

Oracle and Java are registered trademarks of Oracle and/or its affiliates.

Red Hat is a trademark or a registered trademark of Red Hat Inc. in the United States and other

countries.

SL, RTView, SL Corporation, and the SL logo are trademarks or registered trademarks of Sherrill-

Lubinski Corporation in the United States and other countries.

SUSE is a registered trademark or a trademark of SUSE LLC in the United States and other countries.

RSA and BSAFE are either registered trademarks or trademarks of EMC Corporation in the United

States and/or other countries.

Windows is either a registered trademark or a trademark of Microsoft Corporation in the United States

and/or other countries.

All other trademarks, service marks, and company names in this document or website are properties

of their respective owners.

Microsoft product screen shots are reprinted with permission from Microsoft Corporation.

Notice on Export Controls. The technical data and technology inherent in this Document may be

subject to U.S. export control laws, including the U.S. Export Administration Act and its associated

regulations, and may be subject to export or import regulations in other countries. Reader agrees to

comply strictly with all such regulations and acknowledges that Reader has the responsibility to obtain

licenses to export, re-export, or import the Document and any Compliant Products.

Third-party copyright notices

Hitachi Streaming Data Platform includes RSA BSAFE(R) Cryptographic software of EMC Corporation.

Portions of this software were developed at the National Center for Supercomputing Applications

(NCSA) at the University of Illinois at Urbana-Champaign.

2

Hitachi Streaming Data Platform

Regular expression support is provided by the PCRE library package, which is open source software, written by

Philip Hazel, and copyright by the University of Cambridge, England. The original software is available from

ftp://ftp.csx.cam.ac.uk/pub/software/programming/pcre/

This product includes software developed by Andy Clark.

This product includes software developed by Ben Laurie for use in the Apache-SSL HTTP server project.

This product includes software developed by Daisuke Okajima and Kohsuke Kawaguchi (http://

relaxngcc.sf.net/).

This product includes software developed by IAIK of Graz University of Technology.

This product includes software developed by Ralf S. Engelschall <[email protected]> for use in the mod_ssl

project (http://www.modssl.org/).

This product includes software developed by the Apache Software Foundation (http://www.apache.org/).

This product includes software developed by the Java Apache Project for use in the Apache JServ servlet engine

project (http://java.apache.org/).

This product includes software developed by the University of California, Berkeley and its contributors.

This software contains code derived from the RSA Data Security Inc. MD5 Message-Digest Algorithm, including

various modifications by Spyglass Inc., Carnegie Mellon University, and Bell Communications Research, Inc

(Bellcore).

Java is a registered trademark of Oracle and/or its affiliates.

Export of technical data contained in this document may require an export license from the United States

government and/or the government of Japan. Contact the Hitachi Data Systems Legal Department for any

export compliance questions.

3

Hitachi Streaming Data Platform

4

Hitachi Streaming Data Platform

Contents

Preface................................................................................................. 9

1 What is Streaming Data Platform?.........................................................13

A data processing system that analyzes the "right now"............................................14

Streaming Data Platform features............................................................................18

High-speed processing of large sets of time-sequenced data................................18

Summary analysis scenario definitions that require no programming.................... 19

2 Hardware components......................................................................... 21

System components............................................................................................... 22

Components of Streaming Data Platform and Streaming Data Platform software

development kit..................................................................................................... 24

SDP servers........................................................................................................... 26

3 Software components.......................................................................... 29

Components used in stream data processing............................................................30

Stream data..................................................................................................... 30

Input and output stream queues........................................................................31

Tuple............................................................................................................... 31

Query.............................................................................................................. 32

Query group.....................................................................................................34

Window........................................................................................................... 34

Stream data processing engine..........................................................................34

Using CQL to process stream data...........................................................................35

Using definition CQL to define streams and queries............................................. 35

Using data manipulation CQL to specify operations on stream data...................... 35

C External Definition Function............................................................................36

Coordinator groups................................................................................................ 36

SDP broker and SDP coordinator............................................................................. 39

SDP manager.........................................................................................................41

Log notifications............................................................................................... 42

Restart feature................................................................................................. 43

5

Hitachi Streaming Data Platform

4 Data processing...................................................................................47

Filtering records..................................................................................................... 48

Extracting records.................................................................................................. 50

5 Internal adapters................................................................................. 53

Internal input adapters...........................................................................................54

TCP data input adaptor...........................................................................................54

Overview of the TCP data input adaptor............................................................. 54

Prerequisites for using the TCP input adaptor..................................................... 55

Input adaptor configuration of the TCP data input adaptor...................................55

User program that acts as data senders............................................................. 56

TCP data input connector.................................................................................. 56

Number of connections................................................................................56

TCP data format..........................................................................................57

Byte order of data....................................................................................... 60

Restart reception of TCP connection............................................................. 60

Setting for using the TCP data input adaptor................................................. 60

Comparison of supported functions.................................................................... 61

Inputting files........................................................................................................ 62

Inputting HTTP packets.......................................................................................... 63

Outputting to the dashboard...................................................................................63

Cascading adaptor..................................................................................................64

Cascading adaptor processing overview..............................................................66

Communication method.................................................................................... 67

Features...........................................................................................................68

Connection details............................................................................................ 73

Time synchronization settings............................................................................73

Internal output adapters.........................................................................................76

SNMP adaptor........................................................................................................77

SMTP adaptor........................................................................................................ 77

Distributed send connector..................................................................................... 77

Auto-generated adapters........................................................................................ 77

6 External adapters.................................................................................81

External input adapters...........................................................................................82

External output adapters........................................................................................ 82

External adapter library.......................................................................................... 83

Workflow for creating external input adapters.....................................................83

Workflow for creating external output adapters...................................................85

Creating callbacks.............................................................................................86

Connecting to parallel-processing SDP servers..........................................................87

Custom dispatchers................................................................................................87

Rules for creating class files...............................................................................88

Examples of implementing dispatch methods......................................................89

Heartbeat transmission...........................................................................................90

Troubleshooting..................................................................................................... 90

7 RTView Custom Data Adapter............................................................... 91

Setting up the RTView Custom Data Adapter............................................................92

6

Hitachi Streaming Data Platform

Environment setup................................................................................................. 92

Editing the system definition file..............................................................................93

Environment variable settings................................................................................. 95

Data connection settings.........................................................................................95

Uninstallation.........................................................................................................98

File list.................................................................................................................. 98

Operating the RTView Custom Data Adapter............................................................ 98

Types of operations...........................................................................................98

Operation procedure......................................................................................... 99

Starting the RTView Custom Data Adapter..........................................................99

Stopping the RTView Custom Data Adapter.......................................................100

8 Scale-up, scale-out, and data-parallel configurations.............................103

Data-parallel configurations...................................................................................104

Scale-up configuration.....................................................................................104

Scale-out configuration....................................................................................106

Data-parallel settings............................................................................................107

9 Data replication................................................................................. 109

Examples of using data replication.........................................................................110

Data-replication setup...........................................................................................111

10 Setting parameter values in definition files........................................... 113

Relationship between parameters files and definition files........................................114

Examples of setting parameter values in query-definition files and query-group

properties files..................................................................................................... 116

Adapter schema automatic resolution.................................................................... 117

11 Logger.............................................................................................. 123

Log-file generation............................................................................................... 124

Glossary................................................................................................1

7

Hitachi Streaming Data Platform

8

Hitachi Streaming Data Platform

Preface

This manual provides an overview and a basic understanding of Hitachi

Streaming Data Platform (Streaming Data Platform). It is intended to provide

an overview of the features and system configurations of Streaming Data

Platform, and to give you the basic knowledge needed to set up and operate

such a system.

This preface includes the following information:

Intended audience

This document is intended for solution developers and integration developers.

Product version

This document revision applies to Streaming Data Platform version 3.0 or

later.

Release notes

Read the release notes before installing and using this product. They may

contain requirements or restrictions that are not fully described in this

document or updates or corrections to this document. The latest release

notes are available on Hitachi Data Systems Support Connect:

https://

support.hds.com/en_us/documents.html.

Referenced documents

Hitachi Streaming Data Platform documents:

• Hitachi Streaming Data Platform Getting Started Guide, MK-93HSDP006

• Hitachi Streaming Data Platform Setup and Configuration Guide,

MK-93HSDP000

• Hitachi Streaming Data Platform Application Development Guide,

MK-93HSDP001

• Hitachi Streaming Data Platform Messages, MK-93HSDP002

Preface 9

Hitachi Streaming Data Platform

Hitachi Data Systems Portal, http://portal.hds.com

Document conventions

This document uses the following terminology conventions:

Abbreviation Full name or meaning

HSDP Hitachi Streaming Data Platform

Streaming Data

Platform

HSDP software

development kit

Hitachi Streaming Data Platform software development kit

Streaming Data

Platform software

development kit

Java Java

™

JavaVM Java

™

Virtual Machine

Linux

• Red Hat Enterprise Linux

®

• SUSE Linux Enterprise Server

This document uses the following typographic conventions:

Convention

Description

Regular text bold In text: keyboard key, parameter name, property name, hardware labels,

hardware button, hardware switch

In a procedure: user interface item

Italic Variable, emphasis, reference to document title, called-out term

Screen text

Command name and option, drive name, file name, folder name, directory

name, code, file content, system and application output, user input

< > angled brackets Variable (used when italic is not enough to identify variable)

[ ] square brackets Optional value

{ } braces Required or expected value

| vertical bar Choice between two or more options or arguments.

... The item preceding this symbol can be repeated as needed.

This document uses the following icons to draw attention to information:

Icon

Label Description

Note Calls attention to important or additional information.

10 Preface

Hitachi Streaming Data Platform

Icon Label Description

Tip Provides helpful information, guidelines, or suggestions for performing

tasks more effectively.

Caution Warns the user of adverse conditions or consequences (for example,

disruptive operations).

Warning Warns the user of severe conditions or consequences (for example,

destructive operations).

Getting help

Hitachi Data Systems Support Connect is the destination for technical support

of products and solutions sold by Hitachi Data Systems. To contact technical

support, log on to Hitachi Data Systems Support Connect for contact

information:

https://support.hds.com/en_us/contact-us.html.

Hitachi Data Systems Community is a global online community for HDS

customers, partners, independent software vendors, employees, and

prospects. It is the destination to get answers, discover insights, and make

connections. Join the conversation today! Go to

community.hds.com,

register, and complete your profile.

Comments

Please send us your comments on this document to

Include the document title and number, including the revision level (for

example, -07), and refer to specific sections and paragraphs whenever

possible. All comments become the property of Hitachi Data Systems

Corporation.

Thank you!

Preface 11

Hitachi Streaming Data Platform

12 Preface

Hitachi Streaming Data Platform

1

What is Streaming Data Platform?

Streaming Data Platform is a product that enables you to process stream

data; that is, it allows you to analyze in real-time large sets of data as they

are being created. This chapter provides an overview of Streaming Data

Platform and explains its features. This chapter also gives an example of

adding Streaming Data Platform to your current workflow, and it describes

the system configuration needed to set up and run Streaming Data Platform.

□

A data processing system that analyzes the "right now"

□

Streaming Data Platform features

What is Streaming Data Platform? 13

Hitachi Streaming Data Platform

A data processing system that analyzes the "right now"

Our societal infrastructure has been transformed by the massive amounts of

data being packed into our mobile telephones, IC cards, home appliances,

and other electronic devices. As a result, the amount of data handled by data

processing systems continues to grow daily. The ability to quickly summarize

and analyze this data can provide us with valuable new insights. To be useful,

any real-time data processing system must have the ability to create new

value from the massive amounts of data that is being created every second.

Streaming Data Platform responds to this challenge by giving you the ability

to perform stream data processing. Stream data processing gives you real-

time summary analysis of the large quantities of time-sequenced data that is

always being generated, as soon as the data is generated.

For example, think how obtaining real-time summary information on what

was searched for from peoples PCs and mobile phones could increase your

product sales opportunities. If a particular product becomes a hot topic on

product discussion sites, you expect the demand for it to increase, so more

people would tend to search for that product on the various search sites. You

can identify such products by using stream data processing to analyze the

number of searches in real-time and provide summary results. This

information allows retail outlets to increase their orders for the product

before the demand hits, and for the manufacturer to quickly ramp up

production of the product.

On the IT systems side, demand for higher operating efficiencies and lower

costs continues to grow. At the same time, the increasing use of virtualization

and cloud computing results in ever larger and more complex systems,

making it even more difficult for IT to get a good overview of their system's

state of operation. This means that it often takes too long to detect and

resolve problems when they occur. Now, by using stream data processing to

monitor the operating state of the system in real-time, a problem can be

quickly dealt with as soon as it occurs. Moreover, by analyzing trends and

correlations in the information about the system's operations, warning signs

can be detected, which can be used to prevent errors from ever occurring.

Adding Streaming Data Platform to your data processing system gives you a

tool that is designed for processing these large volumes of data.

The following figure provides an overview of a configuration that uses

Streaming Data Platform to implement stream data processing.

14 What is Streaming Data Platform?

Hitachi Streaming Data Platform

Figure 1 Overview of a stream data processing configuration that uses

Streaming Data Platform

Introducing Streaming Data Platform into your stream data processing

system allows you to perform summary analysis of data as it is being

created.

For example, by using a stream data processing system to monitor system

operations, you can summarize and analyze log files output by a server and

HTTP packets sent over a network. These results can then be outputted to a

file, allowing you to monitor your system's operations in real-time. In this

way, you can quickly resolve system problems as they occur, improving

operation and maintenance efficiencies. You can also store the processing

results in a file, allowing you to use other applications to further review or

process the results.

To give you a better idea of how stream data processing carries out real-time

processing, stream data processing is compared to conventional stored data

processing in the following example.

Figure 2 Stored data processing on page 16 shows conventional stored

data processing.

What is Streaming Data Platform? 15

Hitachi Streaming Data Platform

Figure 2 Stored data processing

Data processed using stored data begins by storing the data sequentially in a

database as it occurs. Processing is not actually performed until a user issues

a query for the data stored in the database, and summary analysis results

are returned. Because data that is already stored in a database is searched

when the query is received, there is a time lag between the time the data is

collected and the time the data summary analysis results are produced. In

the figure, processing of data that was collected at 09:00:00 is performed by

a query issued at 09:05:00, obviously lagging behind the time the data was

collected.

Figure 3 Stream data processing on page 17 shows stream data

processing.

16 What is Streaming Data Platform?

Hitachi Streaming Data Platform

Figure 3 Stream data processing

With stream data processing, you pre-load a query (summary analysis

scenario) that will perform incremental data analysis, thus minimizing the

amount of computing that is required. Moreover, because data analysis is

triggered by the data being input, there is no time lag between it and the

time the data is collected, providing you with real-time data summary

analysis. This kind of stream data processing, in which processing is triggered

by the input data itself, is a superior approach for data that is generated

sequentially.

Therefore, the ability to perform stream data processing that you gain by

integrating Streaming Data Platform into your system allows you to get a

real-time summary and analysis of the data.

What is Streaming Data Platform? 17

Hitachi Streaming Data Platform

Streaming Data Platform features

Streaming Data Platform has the following features:

• High-speed processing of large sets of time-sequenced data

• Summary analysis scenario definitions that require no programming

The following subsections explain these features.

High-speed processing of large sets of time-sequenced data

Streaming Data Platform uses both in-memory processing and incremental

computational processing, which allows it to quickly process large sets of

time-sequenced data.

In-memory processing

With in-memory processing, data is processed while it is still in memory, thus

eliminating unnecessary disk access.

When processing large data sets, the time required to perform disk I/O can

be significant. By processing data while it is still in memory, Streaming Data

Platform avoids excess disk I/O, enabling data to be processed faster.

Incremental computational processing

With incremental computational processing, a pre-loaded query is processed

iteratively when triggered by the input data, and the processing results are

available for the next iteration. This means that the next set of computations

does not need to process all of the target data elements; only those elements

that have changed need to be processed.

The following figure shows incremental computation on stream data as

performed by Streaming Data Platform.

18 What is Streaming Data Platform?

Hitachi Streaming Data Platform

Figure 4 Incremental computation performed on stream data

As shown in the figure, when the first stream data element arrives,

Streaming Data Platform performs computational process 1. When the next

stream data element arrives, computational process 2 simply removes data

element 3 from the process range and adds data element 7 to the process

range, building on the results of computational process 1. This minimizes the

total processing required, thus enabling the data to be processed faster.

Summary analysis scenario definitions that require no programming

The actions performed in stream data processing are defined by queries that

are called summary analysis scenarios. Definitions for these summary

analysis scenarios are written in a language called CQL, which is very similar

to SQL, the standard language used to manipulate databases. This means

that you do not need to create a custom analysis application to create

summary analysis scenarios. Summary analysis scenarios can also be

modified simply by changing the definition files written in CQL.

Stream data processing actions written in CQL are called queries. In a single

summary analysis scenario, multiple queries can be coded.

For example, the following figure shows a summary analysis scenario written

in CQL for a temperature monitoring system that has multiple observation

sites, each with an assigned ID. The purpose of the query is to summarize

and analyze all of the below freezing point data found in the observed data

set.

What is Streaming Data Platform? 19

Hitachi Streaming Data Platform

Figure 5 Example of using CQL to write a summary analysis scenario

CQL is a general-purpose query language that can be used to specify a wide

range of processing. By combining multiple queries, you can define summary

analysis scenarios to handle a variety of operations.

20 What is Streaming Data Platform?

Hitachi Streaming Data Platform

2

Hardware components

This chapter provides information about the details of system components,

components of Streaming Data Platform and Streaming Data Platform

software development kit, and SDP servers.

□

System components

□

Components of Streaming Data Platform and Streaming Data Platform

software development kit

□

SDP servers

Hardware components 21

Hitachi Streaming Data Platform

System components

Hitachi Streaming Data Platform offers real-time processing of chronological

data (stream data) that is generated sequentially (in-memory). The stream

data is generated based on a user-defined (through CQL) analysis scenario

through CQL. The structure and components of SDP systems are

development server, data-transfer server, data-analysis server, and

dashboard server.

Example of an SDP system

Description

The components of Streaming Data Platform are as follows.

22 Hardware components

Hitachi Streaming Data Platform

Table 1 SDP system components

S. No. Component Description

1 Development server

• Streaming Data Platform and Streaming Data Platform

software development kit are installed on the

development server.

• This server provides a development environment for

analysis scenarios. It also provides a development

environment for adapters that send and receive the

stream data that is used by the SDP system.

• A system developer can use the API and tools provided

with Streaming Data Platform software development kit

to develop and test analysis scenarios and adapters.

2 Data-transfer server

• Streaming Data Platform is installed on the data-

transfer server.

• This server outputs stream data from a data source to

the data-analysis server.

• The output formats that are supported include text files

and HTTP packets.

• A system architect enables the system to support a

wide range of data types by applying various adapters,

which are developed through the API of Streaming

Data Platform software development kit, to the data-

transfer server.

3 Data-analysis server

• Streaming Data Platform is installed on the data-

analysis server.

• This server processes the stream data that is received

from a data-transfer server (based on a user-

developed analysis scenarios) to output the processed

stream data.

• The output formats that are supported include text

files, SNMP traps, and email.

• A data-analysis server is also able to send processed

stream data to other data-analysis servers and

dashboard servers. Therefore, a system architect can

build a scalable system by connecting multiple data-

analysis servers.

4 Dashboard server RTView of SL Corp. and HSDP are installed. HSDP inputs

stream data from the data analysis server and outputs to

the dashboard on the Viewer client of RTView. The user

can build a system that collects data from data sources

and analyzes in real time by HSDP and visualizes and

monitors the analysis results on the dashboard by RTView.

Hardware components 23

Hitachi Streaming Data Platform

Components of Streaming Data Platform and Streaming

Data Platform software development kit

The components of SDP systems are as follows: SDP servers, stream-data

processing engine, internal adapters, external adapters, SDP brokers, SDP

coordinators, SDP managers, custom data adapters, CQL debug tool, and

adapter library.

SDP and SDP SDK components in a development system

SDP components in a business system

24 Hardware components

Hitachi Streaming Data Platform

Description

The components and features of Streaming Data Platform and Streaming

Data Platform software development kit in SDP systems are as follows.

Table 2 Streaming Data Platform components and features

S.No.

Component Feature description

1 SDP server

• The SDP server receives, processes, and outputs

stream data.

• This server comprises the stream-data processing

engine and internal adapters, which are used to input

and output stream data.

2 Stream-data processing

engine

The stream-data processing engine processes stream data

based on analysis scenarios that are defined (through

CQL) by the user.

3 Internal adapter The internal adapters include the internal input adapter

and internal output adapter.

4 External adapter The external adapters include the external input adapter

and external output adapter.

Hardware components 25

Hitachi Streaming Data Platform

S.No. Component Feature description

5 SDP broker

• An SDP broker gets the I/O address of the stream data

from an SDP coordinator.

• This address is sent by the SDP broker to the SDP

servers and external adapters.

• The internal output adapters of the SDP servers and

external adapters connect to other SDP servers and

external adapters, based on the I/O address, to send

and receive stream data.

6 SDP coordinator

• An SDP coordinator manages the operation information

of the SDP servers such as I/O addresses for stream

data.

• The SDP coordinator can also form a cluster

(coordinator group) with the SDP coordinators of other

hosts.

• The cluster will be used to multiplex the operation

information of SDP servers.

7 SDP manager

• An SDP manager controls SDP servers, the SDP broker,

and SDP coordinator.

• If any SDP server fails, then the SDP manager can

recover the SDP servers based on the operation

information of the SDP servers.

8 Custom data adapter A custom data adapter receives processed stream data

from the internal output adapter of SDP servers and

outputs it to RTView.

Table 3 Streaming Data Platform software development kit components

and features

S.No.

Component Feature description

1 CQL debugging tool The CQL debugging tool debugs analysis

scenarios. The user operates the tool to test the

analysis scenarios developed by using CQL.

2 Adapter library The adapter library consists of the API modules

and headers of the external and internal

adapters. The user can use these utilities to

develop external and internal custom adapters.

SDP servers

An SDP server name is assigned as a unique identifier for each server that is

running in a working directory. Normally, SDP servers start with 1 and when

each server is added, it is incremented by 1.

26 Hardware components

Hitachi Streaming Data Platform

Description

The details of the server name are as follows:

• The rule for naming servers is N * N, where N is an integer whose value is

greater than or equal to 1 (a sequential unique number in a working

directory).

• If an SDP server is terminated normally, then its server name is released

and assigned to the next SDP server that starts.

• If an SDP server is restarted after an abnormal termination, then the

server name that was assigned earlier will be reassigned.

• Server names can be verified using the hsdpstatusshow command.

For more information about the options of the hsdpstatusshow command,

see Hitachi Streaming Data Platform Setup and Configuration Guide

Hardware components 27

Hitachi Streaming Data Platform

28 Hardware components

Hitachi Streaming Data Platform

3

Software components

This chapter provides information about the following components that are

used for processing streaming data: tuples, queries, query groups, windows,

and stream-data processing engine. Additionally, it provides information

about using CQL to process stream data, define streams and queries, and

using data-manipulation CQL to specify operations on stream data.

□

Components used in stream data processing

□

Using CQL to process stream data

□

Coordinator groups

□

SDP broker and SDP coordinator

□

SDP manager

Software components 29

Hitachi Streaming Data Platform

Components used in stream data processing

This section describes the components used in stream data processing.

The following figure shows the components used in stream data processing.

Figure 6 Components used in stream data processing

This section explains the following components shown in the figure.

1. Stream data : Large quantities of time-sequenced data that is

continuously generated.

2. Input and output stream queues : Parts of the stream data path.

3. Stream data processing engine : The part of the stream data processing

system that actually processes the stream data.

4. Tuple : A stream data element that consists of a combination of two or

more data values, one of which is a time (timestamp).

5. Query group : A summary analysis scenario used in stream data

processing. Different query groups are created for different operational

objectives.

6. Query : The action performed in stream data processing. Queries are

written in CQL.

7. Window : The target range of the stream data processing. The amount of

stream data that is included in the window is the process range. It is

defined in the query.

Stream data

Stream data refers to large quantities of time-sequenced data that is

continuously generated.

30 Software components

Hitachi Streaming Data Platform

Stream data flows based on the stream data type (STREAM) defined in CQL,

enters through the input stream queue, and is processed by the query. The

query's processing results are converted back to stream data, and then

passed to the output stream queue and output.

Input and output stream queues

The input stream queue is the path through which the input stream data is

received. The input stream queue is coded in the query using CQL statements

for reading streams.

The output stream queue is the path through which the processing results

(stream data) of the stream data processing engine are output. The output

stream queue is coded in the query using CQL statements for outputting

stream data.

The type of stream data that passes through the input stream queue is called

an input stream, and the type of stream data that passes through the output

stream queue is called an output stream.

Tuple

A tuple is a stream data element that consists of a combination of data

values and a time value (timestamp).

For example, for temperatures observed at observation sites 1 (ID: 1) and 2

(ID: 2), the following figure compares data items, which have only values,

with tuples, which combine both values and time.

Figure 7 Comparison of data items, which have only values, with tuples,

which combine both values and time

By setting a timestamp indicating the observation time to each tuple as

shown in the figure, data can be processed as stream data, rather than

handled simply as temperature information from each observation site.

There are two ways to set the tuple's timestamp: the server mode method,

where the timestamp is set based on the time the tuple arrives at the stream

Software components 31

Hitachi Streaming Data Platform

data processing engine, and the data source mode method, where the

timestamp is set at the time that the data was generated. Use the data

source mode when you want to process stream data sequentially based on

the time information in the data source, such as when you perform log

analysis.

The following subsections explain each mode.

Query

A query defines the processing that is performed on stream data. Queries are

written in a query definition file using CQL. For details about the query

definition file, see the Hitachi Streaming Data Platform Setup and

Configuration Guide.

Queries define the following three types of operations:

• Window operations, which retrieve the data to be analyzed from the

stream data

• Relation operations, which process the retrieved data

• Stream operations, which convert and output the processing results

• Stream to stream operations, which convert data from one data stream to

another

The following figures show the relationship between these operations.

Figure 8 Relationship between the operations defined by a query

32 Software components

Hitachi Streaming Data Platform

Figure 9 Stream to stream operation

A window operation retrieves stream data elements within a specific time

window. The data gathered in this process (tuple group) is called an input

relation.

A relation operation processes the data retrieved by the window operation.

The tuple group generated in this process is called an output relation.

A stream operation takes the data that was processed by the relation

operation, converts it to stream data and outputs it.

Stream to stream operations convert data from one data stream to

another by directly performing operations on the stream data without

creating a relation. In stream to stream operations, any processing can be

performed on the input stream data because there are no specific rules for

the data except that the input and output data must be stream data. To

perform processing, implement the processing logic for the stream to stream

function as a method in the class file created by a user with Java.

Interval calculations whereby data is calculated at fixed intervals (times) by

combining window operations, relational operations, and stream operations

used to be difficult. Now, interval calculations can be processed by using

stream to stream operations.

To use stream to stream operations, it is necessary to define the stream to

stream functions with CQL and create external definition functions. For details

on how to create external definition functions, see the Hitachi Streaming

Data Platform Application Development Guide.

For details about each of these operations, see

Using data manipulation CQL

to specify operations on stream data on page 35.

Stream data is processed according to the definitions in the query definition

file used by the stream data processing engine. For details about the

contents of a query definition file, see

Using CQL to process stream data on

page 35.

Software components 33

Hitachi Streaming Data Platform

Query group

A query group is a summary analysis scenario for stream data that has

already been created by the user. A query group consists of an input stream

queue (input stream), an output stream queue (output stream), and a query.

You create and load query groups to accomplish specific operations. You can

register multiple query groups.

Window

A window is a time range set for the purpose of summarizing and analyzing

stream data. It is defined in a query.

In order to summarize and analyze any data, you must clearly define a target

scope. With stream data as well, you must first decide on a fixed range, and

then process data in that range.

The following figure shows the relationship between stream data and the

window.

Figure 10 Relationship between stream data and the window

The stream data (tuples) in the range defined by the window shown in this

figure are temporarily stored in memory for processing.

A window defines the range of the stream data elements being processed,

which can be defined in terms such as time, number of tuples, and so on. For

details about specifying windows, see

Using data manipulation CQL to specify

operations on stream data on page 35.

Stream data processing engine

The stream data processing engine is the main component of Streaming Data

Platform and actually processes the stream data. The stream data processing

engine performs real-time processing of stream data sent from the input

adaptor, according to the definitions in a pre-loaded query. It then outputs

the processing results to the output adaptor.

34 Software components

Hitachi Streaming Data Platform

Using CQL to process stream data

Stream data is processed according to the instructions in the query definition

file used by the system. The query definition file uses CQL to describe the

stream data type (STREAM) and the queries. These CQL instructions are called

CQL statements.

There are two types of CQL statements used for writing query definition files:

• Definition CQL

These CQL statements are used to define streams and queries.

• Data manipulation CQL

These CQL statements are used to process the stream data.

This section describes how to use definition CQL to define streams and

queries, and how to use data manipulation CQL to perform processing on

stream data.

For additional details about CQL, see the Hitachi Streaming Data Platform

Application Development Guide.

CQL statements consist of keywords, which have preassigned meanings, and

items that you specify following a keyword. An item you specify, combined

with one or more keywords, is called a clause. The code fragments discussed

on the following pages are all clauses. For example, REGISTER STREAM

stream-name, consisting of the keywords REGISTER STREAM and the user-

specified item stream-name, is known as a REGISTER STREAM clause.

Using definition CQL to define streams and queries

CQL statements that are used to define streams and queries are called

definition CQL. There are two types of definition CQL.

• REGISTER STREAM clauses

• REGISTER QUERY clauses

The following subsections explain how to specify each of these clauses.

Using data manipulation CQL to specify operations on stream data

There are three types of data manipulation CQL operations:

• Window operations

• Relation operations

• Stream operations

• Stream to stream operations

Software components 35

Hitachi Streaming Data Platform

C External Definition Function

By using the External Definition Function in the C language, the external

definition stream to stream operation of the acceleration CQL engine can be

used.

To develop the C External Definition Function, you need to include the

headers that the library for the C EDF provides. The library for C EDF

provides structures and functions.

Coordinator groups

SDP coordinators can share information about the connection destinations of

query groups and streams across multiple hosts. SDP coordinators that share

such information are set in a coordinator group using the -chosts option of

the hsdpsetup command. For more details, see the Hitachi Streaming Data

Platform Setup and Configuration Guide. The SDP broker can find the streams

on all the hosts that use the same coordinator group by using the data that is

shared by a coordinator group. Therefore, external adapters and cascading

adapters can use the SDP broker on a host to connect to the streams that are

on multiple hosts. Additionally, if you set the SDP broker of a different host

that uses the same coordinator group as the connection destination, then the

same streams can be connected.

Coordinator group

Information multiplexing

36 Software components

Hitachi Streaming Data Platform

Description

A coordinator group that excludes the local host can be set. If the local host

is not specified in a coordinator group, then the SDP coordinator will not be

started. The SDP broker will use the SDP coordinator of another host to store

and find the information about the local host. In this case, the SDP broker,

which is available on the host that uses the same coordinator group, can

connect to the streams of the same host. Additionally, if the SDP broker uses

the SDP coordinator of another host, then a maximum of 1,024 SDP brokers

(including those that exist on the host of the reference destination SDP

coordinator) can connect to the coordinator group at the information

reference destination.

Coordinator group that does not include the local host

Software components 37

Hitachi Streaming Data Platform

You can set up data multiplicity by using the -cmulti option of the

hsdpsetup command.

When you configure a coordinator group that comprises three or more SDP

coordinators, multiple SDP coordinators can redundantly store identical

information. (You should set up data multiplicity by using the -cmulti option

of the hsdpsetup command. For more information, see the Setup and

Configuration guide.)

When data multiplicity is set to 2 or more, if the SDP coordinators fail within

a coordinator group because the number is less than the multiplicity that has

been set, then the SDP coordinators on another host can be used to continue

the operation. If the number of SDP coordinators that have failed is equal to

or greater than the multiplicity that has been set, then all the SDP

coordinators must be restarted. Additionally, if a query group was started and

running before the SDP coordinator failed, then the query group must be also

restarted.

If the coordinator group was running with two SDP coordinators, then you

can restore the coordinator group to the original state by restarting the

stopped SDP coordinators.

For more information, see Hitachi Streaming Data Platform Setup and

Configuration Guide.

38 Software components

Hitachi Streaming Data Platform

SDP broker and SDP coordinator

The SDP broker and SDP coordinator provide the functions that are used by

external adapters and cascading adapters to connect to the data-

transmission or reception-destination stream. A maximum of one SDP broker

and SDP coordinator can be run on a host. Additionally, you can use the

hsdp_broker operand in the SDP manager-definition file to specify whether

to start the process of the SDP broker. You can use the -chosts option of the

hsdpsetup command to specify whether to start the process of the SDP

coordinator. The SDP coordinator manages the locations of the SDP servers

where the streams are registered that can be connected on the host. The

SDP broker provides a function to search (from the SDP coordinator) for

information that is needed to locate the connection destination stream,

connect to it, and then pass the information to the external adapter and

cascading adapter. If a stream is re-registered to another SDP server later,

the SDP broker and SDP coordinator ensure that the operator can still run the

external adapters and cascading adapters by using the same settings.

Finding streams

Consolidating TCP ports

Software components 39

Hitachi Streaming Data Platform

Description

SDP brokers

An SDP broker obtains the I/O address of stream data from an SDP

coordinator and sends it to the SDP servers and external adapters. The

internal output adapters of the SDP servers and external adapters

connect other SDP servers and external adapters based on the address

information to send and receive stream data.

SDP brokers have the function to transfer connections through TCP

(communication established with external adapters or with cascading

adapters) to internal adapters, where data is sent to and received from

the streams on the local host.

By using this function, SDP brokers can relay connections between the

external adapters or cascading adapters and internal adapters, so that,

connections to different streams on the host can be received by using a

single port number.

40 Software components

Hitachi Streaming Data Platform

SDP coordinators

An SDP coordinator manages the operation information about the SDP

servers such as the I/O addresses of stream data. The SDP coordinator

can also form a cluster (coordinator group) with the SDP coordinators of

other hosts to multiplex the operation information of the SDP servers.

Information managed by the SDP coordinator

The query group that is registered to the SDP server is started by the

SDP broker. When the query group is deleted from the SDP server, the

corresponding registration information is deleted from the SDP broker.

When any information is registered or deleted, if a coordinator group is

set up, then the current registration information is shared immediately

by all SDP coordinators in the coordinator group. The information that is

registered to the SDP coordinator is as follows.

Table 4 Information managed by the SDP coordinator

#

Item Description

1 Host Host name or IP address of the HSDP

system where the connection destination

stream is registered

2 HSDP-working-directory Absolute path of the working directory of

the SDP server where the connection

destination stream is registered

3 Server cluster name Name of the server cluster to which the

server belongs

4 Server name Name of the SDP server

5 Query group name Name of the query group where the

connection destination stream is defined

6 Stream name Name of the connection destination stream

7 TCP connection port TCP port

8 RMI connection port RMI port

9 Stream type Stream type: Input/output

10 Timestamp mode Time stamp mode of the connection

destination stream

11 Dispatch type Property information that describes the

method for dispatching data to the

connection destination stream

12 Schema information Schema information of the connection

destination stream

SDP manager

An SDP manager controls SDP servers, an SDP broker, and an SDP

coordinator.

Software components 41

Hitachi Streaming Data Platform

Description

When an SDP server fails, the SDP manager recovers the SDP server based

on the operation information (of the SDP server) that is retained by the SDP

coordinator.

Log notifications

The log notification feature of the SDP manager is used to monitor the

processes of the various components that are available in a host. When a

process shutdown is detected, the log notification feature outputs messages

to log files.

Process monitoring

Description

The log notification feature monitors the performance of the following

components (in a host):

• SDP broker

• SDP coordinator

• SDP servers

A maximum of one SDP manager can run on a host.

42 Software components

Hitachi Streaming Data Platform

The processes of the SDP broker, SDP coordinator and SDP server

components can be started by running the hsdpmanager or hsdpstart

command.

The processes of the components are activated when the processes are

started. When the processes of the components are activated, the SDP

manager starts monitoring these processes. While monitoring , if a process

shuts down because of a failure, then the SDP manager detects the shutdown

and outputs a message to the log files of the SDP manager. The message

comprises the details about the failure and subsequent shutdown. For more

information about the log files of SDP manager, see Hitachi Streaming Data

Platform Setup and Configuration Guide.

The SDP manager does not monitor the processes of any of the components

if either of the following conditions is met:

• If the SDP manager has not been started by running the hsdpmanager

command.

• If a component has not been started by running the hsdpmanager or

hsdpstart command and the hsdpcql command.

Restart feature

The restart feature of the SDP manager provides the functionality to monitor

the processes of each component that is displayed in log notifications and

restart any processes that have shut down.

Description

When the SDP server is restarted, the query groups and internal adapters

(running on the server before the server shut down) are also restarted.

Additionally, the SDP manager also restarts its own processes that have shut

down. You can enable or disable the restart feature in the hsdp_restart

property of the SDP manager-definition file. For more information about the

SDP manager-definition file, see Hitachi Streaming Data Platform Setup and

Configuration Guide. The CPU, which is specified for the hsdp_cpu_no_list

property of the SDP manager-definition file, is assigned to the process of the

component that has been restarted.

The SDP manager does not restart the processes of a specific component if

any of the following conditions are met:

• If the SDP manager has not been started by running the hsdpmanager

command, then it does not restart any of the processes (including its own

processes) of any of the components.

• If a component has not been started by running the hsdpmanager or

hsdpstart command and the hsdpcql command, then the SDP manager

does not restart the processes of any of the components.

Software components 43

Hitachi Streaming Data Platform

• If the restart setting has been disabled, then the SDP manager does not

restart any of the processes (including its own processes) that are

displayed in the log notifications.

• If a specific operating system is specified as a prerequisite, then based on

the type of operating system, the SDP manager does not restart any of its

own components even if restart has been enabled.

Table 5 Availability of the restart feature of the SDP manager

Prerequisite operating system Versions

SDP manager can be

restarted

Red Hat Enterprise Linux 6.5 Yes

Red Hat Enterprise Linux Advanced Platform 6.6 Yes

7.1 Yes

SUSE Linux Enterprise Server 11 SP2 No

11 SP3 No

12 Yes

Note: When a process is shut down, if the restart feature is unavailable, then

the user must manually restart the process of the SDP manager by using the

hsdpmanager command.

While a component is restarting, if an inter-process connection fails, then the

SDP manager tries a restart request again. You can specify the number of

retries and the corresponding wait intervals in the hsdp_retry_times and

hsdp_retry_interval properties (of the SDP manager-definition file)

respectively. For more information about SDP manager-definition file, see

Hitachi Streaming Data Platform Setup and Configuration Guide. If a

shutdown process fails to restart even after the restart request has been run

for the specified number of times, then the SDP manager stops attempting to

restart the component and starts monitoring other components.

If the SDP coordinators meet both the following conditions, then the SDP

coordinators cannot be restarted by the SDP manager:

• Coordinator group comprises of three or more SDP coordinators

• Number of SDP coordinators equal to or greater than the specified

multiplicity have stopped

If the SDP coordinators cannot be restarted, then all the SDP coordinators

that are running within the coordinator group should be stopped by using the

hsdpmanager -stop command. After stopping all the SDP coordinators, they

have to be manually restarted by running the hsdpmanager -start

command. In this case, if a query group is running, then the stream

44 Software components

Hitachi Streaming Data Platform

information registered to the SDP coordinators is lost. Therefore, the query

group should be restarted.

Software components 45

Hitachi Streaming Data Platform

46 Software components

Hitachi Streaming Data Platform

Filtering records

To perform stream data processing only on specific records, you use a filter

as the data editing callback.

For example, if you are monitoring temperatures from a number of

observation sites and you want to summarize and analyze temperatures from

only one particular observation site, you can filter on that observation site's

ID.

Only common records can be filtered. If the input source is a file, after an

input record is extracted by the file input connector, you must use the format

conversion callback to convert it to a common record before filtering it.

When specifying the evaluation conditions you want to filter on, you can use

any of the record formats and values that are defined in the records. The

following figure shows the positioning and processing of the callback involved

in record filtering.

48 Data processing

Hitachi Streaming Data Platform

Figure 11 Positioning and processing of the callback involved in record

filtering

1. The records passed to the filter are first filtered by record format.

Only records of record format R1 meet the first condition, so only these

records are selected for processing by the next condition. Records that

do not satisfy this condition are passed to the next callback.

2. After the records are filtered by record format, they are then filtered by

record value.

This condition specifies that only those records whose ID has a value of 1

are to be passed to the next callback. In this way, only those records

that satisfy both conditions will be processed by the next callback.

Records that do not satisfy these conditions are discarded.

Data processing 49

Hitachi Streaming Data Platform

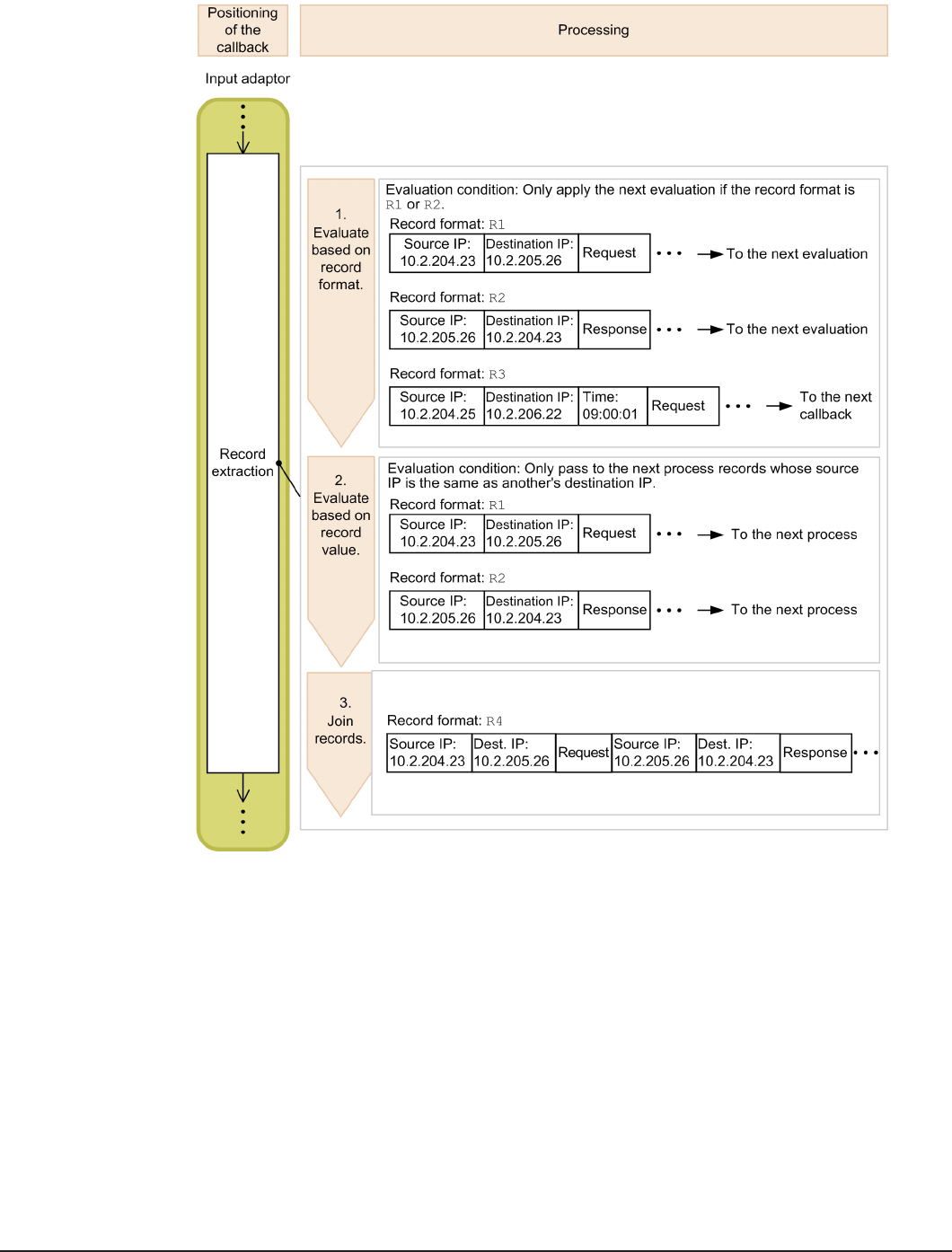

Extracting records

After you have filtered for the desired records, you use a record extraction

callback to collect all of the necessary information from the filtered records

into a single record.

For example, to summarize and analyze the responsiveness between a client

and a server, after the HTTP packet input connector is used as the input

callback, you could use a record extraction callback as the data editing

callback. You could then use the record extraction callback to join an HTTP

request and response packet pair into one record, based on the transmission

source IP addresses and the transmission destination IP addresses. This

would allow you to gain a clear understanding of response times, and to

easily summarize and analyze the resulting data.

In the following figure, after records are filtered by record format and record

value so that only the desired records are selected, the record extraction

callback joins the resulting records, and generates a new record. The

following figure shows the positioning and processing of the callback involved

in record extraction.

50 Data processing

Hitachi Streaming Data Platform

Figure 12 Positioning and processing of the callback involved in record

extraction

1. Records passed to the record extraction callback are first filtered by

record format.

Only records whose record format is R1 or R2 meet the first condition, so

only these records are selected for processing by the next condition.

Records that do not satisfy this condition are passed to the next callback.

2. After the records are filtered by record format, they are then filtered by

record value.

Data processing 51

Hitachi Streaming Data Platform

This condition specifies that records are to be passed to the next process

only if the source IP of the request matches the destination IP of the

response, and the destination IP of the request matches the source IP of

the response. This means that only those records that match this

condition are passed to the next process.

3. Records filtered by record format and record value are joined to produce

a single record.

Records joined in this step are selected for processing by the next

callback.

52 Data processing

Hitachi Streaming Data Platform

5

Internal adapters

This chapter provides information about internal adapters. The internal

adapters provided with SDP are also called internal standard adapters. The

two types of internal adapters are as follows: internal input adapters and

internal output adapters. User-developed internal adapters, also called

internal custom adapters, can be developed by using the Streaming Data

Platform software development kit APIs.

□

Internal input adapters

□

TCP data input adaptor

□

Inputting files

□

Inputting HTTP packets

□

Outputting to the dashboard

□

Cascading adaptor

□

Internal output adapters

□

SNMP adaptor

□

SMTP adaptor

□

Distributed send connector

□

Auto-generated adapters

Internal adapters 53

Hitachi Streaming Data Platform

Internal input adapters

Internal input adapters receive stream data in specific formats and send the

data to the stream-data processing engine.

Description

The formats that are supported by the internal input adapters are as follows:

• Text files

• HTTP packets

TCP data input adaptor

Overview of the TCP data input adaptor

Streaming Data Platform provides TCP-data input adapters for one of the

internal standard adapters. When a user program or cascading adapter sends

a connection request for data transmission to Streaming Data Platform, a

TCP-data input adapter receives a connection notification through the SDP

broker. The TCP-data input adapter receives data from the source program

through an established TCP connection. It converts the TCP data that has

been received into tuples and sends the tuples to the SDP servers. The TCP-

data input adapter receives data from the connection source through an

established TCP connection. It converts the TCP data, which has been

received, into tuples and sends the tuples to the SDP server.

54 Internal adapters

Hitachi Streaming Data Platform

Figure 13 Receive TCP data and send tuples

TCP data input adaptor: Sends the tuples to a Java stream in the SDP server.

Prerequisites for using the TCP input adaptor

The following are prerequisites for using this adaptor.

Input adaptor configuration of the TCP data input adaptor

The TCP data input connector must be set as an input connector of input

adaptor. The following figure and list shows the combination of callbacks in

the input data adaptor configuration. If the TCP data is sent by using an

external input adapter as a program, then the SDP broker must be running

on a host that is using a TCP-data input adapter. If you use an external input

adapter as the transmission source of TCP data, then the SDP broker must be

running on the host on which you want to use the TCP-data input adapter.

When a connection request is received from the external input adapter, the

SDP broker starts the TCP input adapter, which is required for

communication.

Internal adapters 55

Hitachi Streaming Data Platform

Figure 14 Input adaptor configuration

Table 6 List of the callback combinations

Adaptor

Type

Callback combination

Input Callback Editing Callback Sending Callback

Java TCP data input

connector

Any kind of editing

callback can be set or

omitted

Any kind of sending

callback

C - Sending callback

User program that acts as data senders

User programs that send data to the TCP data input adaptor for C must be

implemented with the external-adapter library. When the external-adapter

library is used to implement a TCP-data input adapter, the user program

specifies both the stream information and address of the SDP broker for the

host (running in the TCP input adapter) in the definition file of the external

input adapter. This enables the external input adapter to establish

communication.

TCP data input connector

This section describes details of the TCP data input connector that is

processing of the input adaptor.

Number of connections

After the TCP-data input adapter has been started, the TCP-data input

connector receives data from the data source through a TCP connection.. The

following table shows the number of connections that are established

between the user program and this adaptor for Java.

56 Internal adapters

Hitachi Streaming Data Platform

Table 7 Number of connections

Adaptor type Number of connections Output tuples

Java 1 to 16 connections (per adaptor) can

be established as indicated in

Figure 15 Number of connections (for

Java) on page 57.

Tuples that are sent to the Java

stream in the HSDP server by this

adaptor are time-sequenced data.

Figure 15 Number of connections (for Java)

TCP data format

This connector input TCP data as follows:

Figure 16 TCP data format

As shown above, TCP data consists of header data and a series of one or

more units of data. Each unit of data consists of a given number of data

items. This connector processes header data and units of data as follows:

Internal adapters 57

Hitachi Streaming Data Platform

Figure 17 Form unit data into record

The sections shown in the above figure are as follows:

1. Seek the byte size of the header data as an offset.

2. Seek the byte size of the fixed-length data as an offset.

3. Seek the byte size of the data as an offset.

4. Form the data into a record field.

5. Repeat section 3 and 4.

6. When the connector has performed the seek to the end of the unit of

data, the connector outputs the record to the next callback.

7. Repeat section 2 to 6.

The user defines each byte size of the offset and data of the target to be

formed in the adaptor composition file, and can select the data to be formed

into record fields. The details of header information are as follows.

Item

Description Size Data type

Data kind Specifies the kind of data.

0: Normal data

2 bytes

short

(Reserved) A domain reserved for future

extension.

2 bytes

short

If the TCP data input connector inputs data whose data type is variable-

length character (VARCHAR) and then forms input data into a record field, the

58 Internal adapters

Hitachi Streaming Data Platform

user program that acts as data senders has to send the data to the TCP data

input connector with the following data format:

Figure 18 Data format of the TCP data input connector

Table 8 Description of the data format

Item

Description Size Data type Value

Data length Specifies the

length of the byte

array that stores

variable-length

character data.

If this value is

more than the

size attribute

value of the TCP

data input

connector

definition in the

adaptor

configuration

definition file, the

TCP data input

connector outputs

the KFSP48916

warning message,

inputs the

variable-length

character data

from the

beginning to the

size attribute

value, and then

2

bytes

short

An integer from 0

to 32767

Internal adapters 59

Hitachi Streaming Data Platform

Item Description Size Data type Value

forms input data

into a record field.

If a value of zero

is specified, the

variable-length

character data

must be omitted.

In this case, the

TCP data input

connector forms a

null character into

a record field.

Variable-length

character data

Specifies the byte

array that stores

variable-length

character data.

The length of the

byte array must

be same as the

value specified in

the data length.

Note that the TCP

data input

connector does

not check the

value of this data

(for example, the

character code

and control

characters), and

then forms the

specified byte

array into a record

field without any

changes.

1 to 32767 bytes

varchar