RESEARCH ON AN AUGMENTED LAGRANGIAN PENALTY FUNCTION ALGORITHM FOR NONLINEAR PROGRAMMING

FINAL REPORT

NASA RESEARCH GRANT NSG 1525

April 1978 through December 1978

Principal Investigator: Dr. Les Frair

Department of IEOR

VPI & SU

ABSTRACT

This research represents an extensive study of the Augmented Lagrangian (ALAG) Penalty Function Algorithm for optimizing nonlinear mathematical models. The mathematical models of interest are deterministic in nature and finite dimensional optimization is assumed. A detailed review of penalty function techniques in general and the ALAG technique in particular is presented. Numerical experiments are conducted utilizing a number of nonlinear optimization problems to identify an efficient ALAG Penalty Function Technique for computer implementation.

TABLE OF CONTENTS

1. INTRODUCTION

2. REVIEW OF RELATED MINIMIZATION TECHNIQUES

2.1 Penalty Function Technique

2.2 Lagrangian Primal-Dual Method

3. AUGMENTED LAGRANGIAN PENALTY FUNCTION TECHNIQUE

3.1 Introduction

3.2 Review of Technique for Equality Constrained Problem

3.2.1 Equality Constrained Problem

3.2.2 Powell-Hestenes Augmented Penalty Function

3.3 Review of the Technique for a Constrained Problem with Equalities and Inequalities

3.3.1 Constrained Problem

3.3.2 Powell-Hestenes-Rockafellar Penalty Function

4. NUMERICAL RESULTS

4.1 Introduction

4.2 Numerical Results for Unconstrained Algorithms

4.3 Numerical Results for Constrained Algorithms

APPENDIX A. MATHEMATICAL CONCEPTS AND PENALTY FUNCTION TECHNIQUES

APPENDIX B. COMPUTERIZED ALAG ALGORITHM AND APPLICATION

APPENDIX C. COMPUTER PROGRAM DOCUMENTATION

REFERENCES

I. INTRODUCTION

The current advanced stage of development of the theoretical framework of unconstrained optimization has served as a powerful force for unification of the subject which, until some years ago, consisted of a collection of disjointed algorithms. The evolution of these algorithms depended strongly on practical computation of solutions to specific problems. The interplay of theory and algorithms has made it possible to transfer theoretical progress into improved algorithms. Powell (P5) has comprehensively reviewed modern algorithms and the effect of theoretical work on the design of practical algorithms for unconstrained optimization. Murray (M11) has presented the mainstream of developments in numerical methods for unconstrained optimization.

Much of the current research has been focused on understanding, comparing, improving and extending the available numerical methods instead of devising totally new algorithmic concepts. These refinements and modifications are not expected to significantly improve the efficiency of existing algorithms (G2).

At present a robust collection of potent and sophisticated general purpose algorithms for unconstrained optimization is available as high-quality software (G2). These algorithms have been tested and proven to be efficient and reliable for solving a variety of typical test problems and practical problems. Successful development of such algorithms for unconstrained optimization has been the springboard for the more recent success in the design of algorithms for constrained problems.

Availability of efficient numerical methods for solving unconstrained optimization problems has motivated the design of algorithms that convert a constrained problem to a sequence of unconstrained problems which have the property that successive solutions of the unconstrained problems converge to the solution of the constrained problem. This transformation approach has been systematically employed in the development of numerical algorithms for constrained optimization for more than a decade. In recent years a substantial body of theory has been established for these transformation techniques and many computational algorithms have been proposed (B4), (F1), (L3).

To review briefly the transformation technique, consider the following inequality constrained nonlinear programming problem. Let $f(X)$ and $c_i(X)$, $i = 1, 2, \ldots, m$, be real valued functions of class $C^{(2)}$ on a nonempty open set $L$ in an $n$-dimensional Euclidean space $E^n$.

P1: Minimize $f(X)$ over all $X \in L$

subject to $c_i(X) \ge 0$, $i = 1, 2, \ldots, m$,

where the feasible region $F$ is a nonempty compact set:

$$F = \{X : c_i(X) \ge 0,\ i = 1, 2, \ldots, m,\ X \in L,\ L \subseteq E^n\}$$

Methods for solving P1 via unconstrained minimization have been classified, described and analyzed in detail by Lootsma (L3). Parametric transformation methods solve P1 by reducing the computational process to a sequence of successive unconstrained minimizations of a compound function defined in terms of the objective function $f(X)$, the constraint functions $c_i(X)$, $i = 1, 2, \ldots, m$, and one or more controlling parameters. By gradually removing the effect of the constraints in the compound function by controlled changes in the value of one or more parameters, a sequence of unconstrained problems is generated. Successive solutions of these unconstrained problems converge to a solution of the original constrained problem. The advantage of this approach lies in the fact that the constraints need not be dealt with separately and that efficient numerical methods for computing unconstrained extrema can be applied.

During recent years the parametric transformation technique known as the Augmented Lagrangian (ALAG) Penalty Function Technique has gained recognition as one of the most effective types of methods for solving constrained minimization problems. In the opinion of many researchers in this field, the ALAG penalty function technique is the best method available for solving problems with nonlinear constraints in the absence of special structure (B4). The disadvantages of the method are negligible and the advantages are strong, especially the lack of numerical difficulties and the ease of using the unconstrained minimization routine. The method has global convergence at an ultimately superlinear rate, the computational effort per minimization falls off rapidly, the initial starting point need not be feasible and the function is defined for all values of the parameters (F7).

The ALAG penalty function technique is a balance between the classical penalty function technique and the Lagrangian primal-dual method, which are both parametric transformation techniques. The design of this method was motivated by efforts to overcome the numerical instability of the penalty function technique near the solution (P3), (H2) and attempts to eliminate the "duality gap" in nonconvex programming (R6).

The classical penalty function technique and the Lagrangian primal-dual method are briefly reviewed and the development of the ALAG penalty function technique by the merger of the penalty idea with the primal-dual philosophy is traced in Section 2. The ALAG penalty function technique is described, reviewed and discussed in Section 3. The results of numerical investigations are presented in Section 4. The symbols, mathematical terms and related concepts used in this work are defined briefly in Appendix A. The method of solving a nonlinear problem using the ALAG penalty function technique is illustrated with numerical examples in Appendix B.

II. REVIEW OF RELATED MINIMIZATION TECHNIQUES

2.1 Penalty Function Technique

The penalty methods have been extensively used in numerical optimization for more than a decade. The penalty function approach has been popular, as evidenced by applications to practical problems (D3), because it is conceptually simple and easy to implement. It permits a transparent program structure as it is fully based on unconstrained minimization. These methods are applicable to a broad class of problems, even those involving nonconvex constraints. The most attractive feature of these methods is the fact that they take advantage of the powerful unconstrained minimization methods that have been developed in recent years.

The penalty function technique is a sequential parametric transformation method. It is an iterative algorithm that requires the solution of an unconstrained optimization problem at each iteration. In these methods the objective function $f(X)$ is minimized using an unconstrained minimization technique while maintaining implicit control over the constraint violations by penalizing the objective function at points which violate or tend to violate the constraints. The solution $X^*$ to the constrained minimization problem P1 is approached from outside the feasible region $F$ and these methods are also referred to as exterior point methods.

The penalty function technique has been popularized mainly through the work of Fiacco and McCormick (F1). Fiacco and McCormick (F1) developed the Sequential Unconstrained Minimization Techniques (SUMT) for nonlinear programming using penalty function and related concepts. A chronological survey of the development of the penalty methods and detailed discussion and analysis of penalty and related methods are presented in reference (F1).

The penalty function method for P1 consists of sequential minimizations of the form

minimize $P(X, \sigma)$, $X \in L \subseteq E^n$.

$P(X, \sigma)$ is the penalty function with control parameter $\sigma > 0$. This function is designed to impose an increasing penalty on the objective function as constraint violation increases. The control parameter $\sigma$ is used effectively to increase the magnitude of penalty.

The penalty function transformation may be represented as

$$P(X, \sigma) = f(X) + \sigma \sum_{i=1}^{m} \eta_i(c_i(X)), \quad \sigma > 0 \qquad [1]$$

where $\eta_i(t)$ is defined as the loss function with the following properties.

(i) $\eta_i(t)$ is continuous on $-\infty < t < \infty$.

(ii) For an inequality constraint $c_i(X) \ge 0$: $\eta_i(t) \to \infty$ as $t \to -\infty$ and $\eta_i(t) = 0$ for $t \ge 0$.

(iii) For an equality constraint $c_i(X) = 0$: $\eta_i(t) \ge 0\ \forall t$, $\eta_i(t) = 0$ for $t = 0$ and $\eta_i(t) \to \infty$ as $t \to \pm\infty$.

Usually the loss function, $\eta_i(t)$, is chosen such that when the objective function and the constraint functions are of class $C^{(2)}$, $P(X, \sigma)$ is twice differentiable. $P(X, \sigma)$ is defined on an open set $L \subseteq E^n$ and $P(X, \sigma) \to \infty$ as constraint violation increases.

Several different loss functions have been proposed for use in the penalty function algorithm and these are discussed by Fiacco and McCormick (F1).

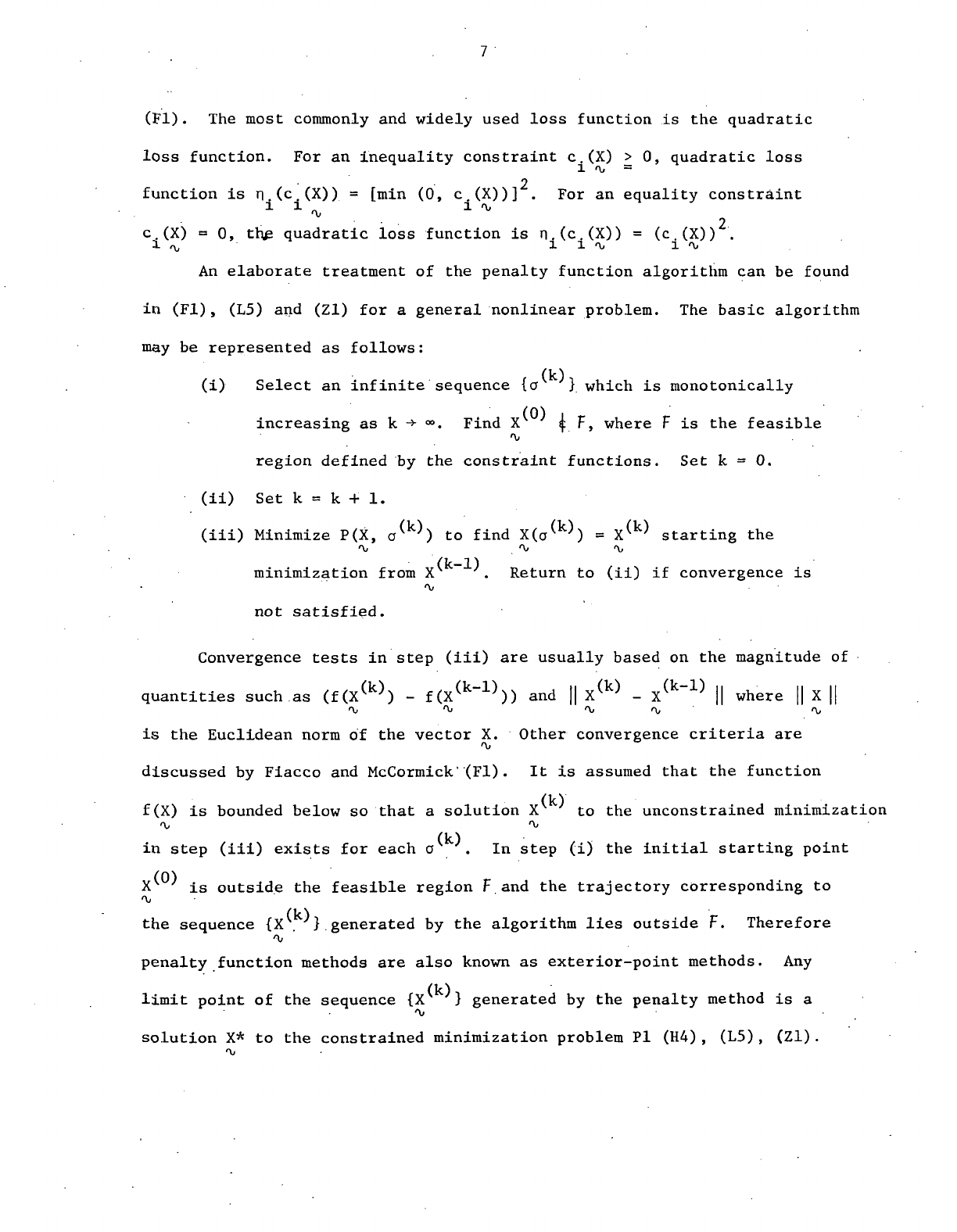

The most commonly and widely used loss function is the quadratic loss function. For an inequality constraint $c_i(X) \ge 0$, the quadratic loss function is $\eta_i(c_i(X)) = [\min(0, c_i(X))]^2$. For an equality constraint $c_i(X) = 0$, the quadratic loss function is $\eta_i(c_i(X)) = (c_i(X))^2$.
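As a minimal sketch (not taken from the report), the quadratic loss penalty of [1] can be written in a few lines of Python. The function name `quadratic_penalty` and the two-variable test problem are illustrative assumptions only.

```python
def quadratic_penalty(f, ineq, eq, x, sigma):
    """P(x, sigma) = f(x) + sigma * (sum of quadratic losses).

    ineq: list of functions c_i with constraint c_i(x) >= 0
    eq:   list of functions c_i with constraint c_i(x) == 0
    """
    loss = 0.0
    for c in ineq:
        loss += min(0.0, c(x)) ** 2   # zero whenever c_i(x) >= 0
    for c in eq:
        loss += c(x) ** 2             # zero only when c_i(x) == 0
    return f(x) + sigma * loss


# Hypothetical test problem (an assumption made for illustration).
f = lambda x: x[0] ** 2 + x[1] ** 2
ineq = [lambda x: x[0] - 1.0]          # x1 - 1 >= 0
eq = [lambda x: x[0] + x[1] - 3.0]     # x1 + x2 - 3 = 0

p_feasible = quadratic_penalty(f, ineq, eq, [1.0, 2.0], 10.0)
p_infeasible = quadratic_penalty(f, ineq, eq, [0.0, 0.0], 10.0)
```

At the feasible point the penalty term vanishes and the function reduces to the objective; at the infeasible point both constraints contribute to the loss.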

An elaborate treatment of the penalty function algorithm can be found in (F1), (L5) and (Z1) for a general nonlinear problem. The basic algorithm may be represented as follows:

(i) Select an infinite sequence $\{\sigma^{(k)}\}$ which is monotonically increasing as $k \to \infty$. Find $X^{(0)} \notin F$, where $F$ is the feasible region defined by the constraint functions. Set $k = 0$.

(ii) Set $k = k + 1$.

(iii) Minimize $P(X, \sigma^{(k)})$ to find $X(\sigma^{(k)}) = X^{(k)}$, starting the minimization from $X^{(k-1)}$. Return to (ii) if convergence is not satisfied.

Convergence tests in step (iii) are usually based on the magnitude of quantities such as $(f(X^{(k)}) - f(X^{(k-1)}))$ and $\|X^{(k)} - X^{(k-1)}\|$, where $\|X\|$ is the Euclidean norm of the vector $X$. Other convergence criteria are discussed by Fiacco and McCormick (F1).

It is assumed that the function $f(X)$ is bounded below so that a solution $X^{(k)}$ to the unconstrained minimization in step (iii) exists for each $\sigma^{(k)}$. In step (i) the initial starting point $X^{(0)}$ is outside the feasible region $F$ and the trajectory corresponding to the sequence $\{X^{(k)}\}$ generated by the algorithm lies outside $F$. Therefore penalty function methods are also known as exterior-point methods. Any limit point of the sequence $\{X^{(k)}\}$ generated by the penalty method is a solution $X^*$ to the constrained minimization problem P1 (H4), (L5), (Z1).
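The basic penalty algorithm can be sketched as follows. This is an illustration under stated assumptions, not the report's program: the one-variable problem (minimize $x^2$ subject to $x - 1 \ge 0$), the fixed-step gradient descent used for the inner minimization, and all function names are choices made here for brevity.

```python
def f_grad(x):
    return 2.0 * x                      # gradient of f(x) = x**2


def c(x):
    return x - 1.0                      # constraint c(x) >= 0


def penalty_grad(x, sigma):
    # d/dx of P(x, sigma) = x**2 + sigma * min(0, c(x))**2
    return f_grad(x) + 2.0 * sigma * min(0.0, c(x))


def minimize_penalty(x, sigma, iters=200):
    # Fixed-step gradient descent; 2 + 2*sigma bounds the curvature of P.
    step = 1.0 / (2.0 + 2.0 * sigma)
    for _ in range(iters):
        x -= step * penalty_grad(x, sigma)
    return x


def exterior_penalty(x0, sigmas):
    # Steps (i)-(iii): each minimization starts from the previous solution.
    x, path = x0, []
    for sigma in sigmas:
        x = minimize_penalty(x, sigma)
        path.append(x)
    return path


# x0 = 0 is infeasible; the increasing sequence of sigma values is arbitrary.
path = exterior_penalty(0.0, [1.0, 10.0, 100.0, 1000.0])
```

For this problem each unconstrained minimizer is $\sigma/(1+\sigma)$, so the iterates approach the constrained solution $x^* = 1$ from outside the feasible region, as the exterior-point description suggests.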

The penalty function technique might be regarded as a "primal" approach to implicitly account for the constraints, although its connections with duality are known (F1), (L5), (Z1).

The approximation of the constrained problem by the unconstrained penalty problem becomes more and more exact as the control parameter $\sigma \to \infty$. However, considerable computational difficulties are experienced with the traditional penalty function algorithm as $\sigma \to \infty$. These difficulties are delineated in detail in references (L3), (L5), (M5), (R11). The computational difficulties arise from $P(X, \sigma)$ forming an increasingly steep-sided valley as the control parameter is increased to allow the unconstrained solutions to approach the constrained solution to P1 from outside the active constraints. In particular, the Hessian matrix of the penalty function [1] becomes extremely ill-conditioned as $\sigma$ increases. This leads to numerical instabilities during unconstrained minimizations of the penalty function and slow convergence of the algorithm.
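A small worked example, chosen here for illustration and not taken from the report, makes the ill-conditioning explicit. Consider minimizing $x_1^2 + x_2^2$ subject to the single equality constraint $x_1 + x_2 - 1 = 0$, using the quadratic loss function in [1].

```latex
\begin{align*}
P(X,\sigma) &= x_1^2 + x_2^2 + \sigma\,(x_1 + x_2 - 1)^2, \\
\nabla^2 P(X,\sigma) &= 2I + 2\sigma
  \begin{pmatrix} 1 & 1 \\ 1 & 1 \end{pmatrix},
  \qquad \text{eigenvalues } 2 \text{ and } 2 + 4\sigma, \\
\kappa\bigl(\nabla^2 P\bigr) &= \frac{2 + 4\sigma}{2} = 1 + 2\sigma, \\
x_1(\sigma) = x_2(\sigma) &= \frac{\sigma}{1 + 2\sigma}
  \;\longrightarrow\; \tfrac{1}{2} \quad (\sigma \to \infty), \qquad
c(X(\sigma)) = -\frac{1}{1 + 2\sigma}.
\end{align*}
```

In this example the constraint violation shrinks only like $1/(1+2\sigma)$, while the condition number of the Hessian grows like $1 + 2\sigma$: an accurate solution requires a large $\sigma$, which is exactly the regime in which the unconstrained minimization becomes badly conditioned.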

Attempts to overcome these computational difficulties have resulted in several modifications (F1), (F2), (L3) to the penalty function technique. Hestenes (H2) and Powell (P3), at about the same time, independently proposed modifications that resulted in a new method related to the penalty function technique. In this new method penalty terms are added to the Lagrangian associated with the original constrained problem. Hestenes (H2) termed this the "Multiplier Method". It has become known as the Augmented Lagrangian Penalty Function Technique in subsequent discussions. This method alleviated some of the computational difficulties associated with the traditional penalty function technique (F8) and achieved better convergence properties than the method of penalty functions (H4). This method is reviewed briefly in Chapter 3.

2.2 Lagrangian Primal-Dual Method

The Lagrangian primal-dual method transforms a constrained convex programming problem into a sequence of unconstrained minimizations of the classical Lagrangian associated with the constrained minimization problem. The constrained problem P1 becomes a convex programming problem when the objective function $f(X)$ is convex and the constraints $c_i(X)$, $i = 1, 2, \ldots, m$, are concave.

The concept of the primal-dual method was first implemented by Arrow, et al. (A1) in the differential gradient scheme for approaching the saddle-point of the Lagrangian $L(X, \lambda)$ associated with a convex program. The Lagrangian associated with the convex problem P1 may be represented as

$$L(X, \lambda) = f(X) - \sum_{i=1}^{m} \lambda_i c_i(X), \quad X \in L \subseteq E^n,\ \lambda \in E^m_+ \qquad [2]$$

where $E^m_+$ is the nonnegative orthant of $m$-dimensional Euclidean space $E^m$ and the vector $\lambda \in E^m_+$ is called a vector of multipliers.

Suppose that a point $X^*$ satisfies the constraints of the convex program P1 and the problem functions are of class $C$. If there exists a vector $\lambda^*$ such that

$$\lambda^* \ge 0, \quad \lambda_i^* c_i(X^*) = 0\ \ \forall i \quad \text{and} \quad \nabla L(X^*, \lambda^*) = 0, \qquad [3]$$

then $X^*$ is a global solution to the convex program P1. The vector $\lambda^*$ is said to be the vector of Lagrange multipliers associated with $X^*$. If the gradients of the active constraints at $X^*$ are linearly independent, then $X^*$ is a regular point of the feasible region $F$ and there exists a vector of Lagrange multipliers $\lambda^*$ satisfying [3]. The conditions in [3] are called the Kuhn-Tucker first-order necessary conditions for $X^*$ to be a solution to P1 and, for the convex program P1, these are also sufficient conditions for $X^*$ to be a global solution.

For a nondifferentiable convex problem P1 let there exist an $X^* \in E^n$ and a $\lambda^* \in E^m_+$ such that the pair $(X^*, \lambda^*)$ is the saddle-point of the Lagrangian $L(X, \lambda)$ associated with the convex program P1, i.e., $L(X^*, \lambda) \le L(X^*, \lambda^*) \le L(X, \lambda^*)$. Then $X^*$ is the global solution to the convex program P1 and $\lambda^*$ is the vector of Lagrange multipliers associated with $X^*$.

The differential gradient scheme of Arrow, et al. (A1) for a convex program may be viewed as a small-step primal-dual method where estimates of $X^*$ and $\lambda^*$ are modified at each iteration to exploit the saddle-point nature of $L(X, \lambda)$ near $(X^*, \lambda^*)$. This structure of the method is revealed by the system of difference equations formulated by Uzawa (A1) to represent the differential gradient method. Davis (D1) represents the iterations in this method as

$$X^{(k+1)} = X^{(k)} - a_1 B_1^{-1} \nabla L(X^{(k)}, \lambda^{(k)})$$

$$\lambda^{(k+1)} = \max\{0,\ \lambda^{(k)} - a_2 B_2^{-1} \nabla_\lambda L(X^{(k)}, \lambda^{(k)})\}$$

where $a_1$ and $a_2$ are scalars representing step-size, $\nabla L(X, \lambda)$ is the gradient of $L(X, \lambda)$ with respect to $X$, $\nabla_\lambda L(X, \lambda)$ is the gradient of $L(X, \lambda)$ with respect to $\lambda$, and $B_1$ and $B_2$ are positive definite matrices of order $n$ and $m$ respectively. The algorithm may be started at any $X^{(0)} \in F$ and $\lambda^{(0)} \in E^m_+$.

As the constrained problem in the above method is convex, the Lagrangian $L(X, \lambda)$ is also convex with respect to $X$. The iterations on $X^{(k)}$, therefore, are descent iterations on $L(X, \lambda)$ and the update of multipliers $\lambda$ may be viewed as ascent iterations on $L(X, \lambda)$. The $\lambda$ update may also be regarded as approximate solutions to the associated dual problem at $X^{(k)}$. The dual associated with P1 is

D1: Maximize $\psi(\lambda)$ over all $\lambda \in E^m_+$, where

$$\psi(\lambda) = \inf_{X \in L} L(X, \lambda).$$
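A one-variable convex example, constructed here for illustration, shows the primal and dual problems side by side. Take $f(x) = x^2$ with the single constraint $c(x) = x - 1 \ge 0$, and write the dual function as $\psi(\lambda) = \inf_x L(x, \lambda)$; the symbol $\psi$ is the notation assumed in this sketch.

```latex
\begin{align*}
L(x,\lambda) &= x^2 - \lambda\,(x - 1), \qquad \lambda \ge 0, \\
\frac{\partial L}{\partial x} = 2x - \lambda = 0
  \;&\Longrightarrow\; x(\lambda) = \tfrac{\lambda}{2}, \\
\psi(\lambda) &= L\bigl(x(\lambda),\lambda\bigr)
  = -\tfrac{1}{4}\lambda^2 + \lambda, \\
\psi'(\lambda) = -\tfrac{1}{2}\lambda + 1 = 0
  \;&\Longrightarrow\; \lambda^* = 2, \qquad \psi(\lambda^*) = 1, \\
x^* = x(\lambda^*) &= 1, \qquad f(x^*) = 1 = \psi(\lambda^*).
\end{align*}
```

The pair $(x^*, \lambda^*) = (1, 2)$ satisfies $\lambda^* \ge 0$, $\lambda^* c(x^*) = 0$ and $\partial L / \partial x = 0$, and the optimal primal and dual values coincide, as expected for a convex program.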

The Lagrangian $L(X, \lambda)$ is minimized over $X \in L$ for a sequence of multiplier vectors $\lambda^{(k)}$ and the algorithm is a primal-dual method. Methods that are similar in concept to this algorithm are described by Powell (P4), Bertsekas (B4), and Lasdon (L1).

The algorithms based on the Lagrangian primal-dual method are not susceptible to numerical instabilities such as those discussed in connection with the penalty method. Primal-dual methods are based on the viewpoint that the Lagrange multipliers $\lambda^*$ are also fundamental unknowns associated with a constrained problem. This is because Lagrange multipliers measure sensitivities and often have meaningful interpretations as prices associated with constraint resources (H4), (L5). Useful duality results for convex programs have been presented by Luenberger (L5) and Zangwill (Z1). Various formulations of the duality theory for nonlinear convex programs using the classical Lagrangian have been reworked and extended by Geoffrion (G1) so as to facilitate, more readily, computational and theoretical applications. Methods based on the classical Lagrangian for solving a constrained problem P1 have been reviewed by Lootsma (L3).

The Lagrangian primal-dual method is known to have serious disadvantages (R3), (R6). The most restrictive one is that the constrained problem must be convex in order for the dual problem to be well defined and the $\lambda$ iterations to be meaningful. In general inf (P1) $\ge$ sup (D1) and the equality holds only for the convex problem P1. For nonconvex problems only the inequality holds in the above relationship and in such cases a duality gap is said to exist.

For nonconvex problems Everett (E2) introduced a primal-dual method called the generalized Lagrange multiplier method. This and other associated methods are summarized by Lootsma (L3). Even though Everett (E2) suggested some methods of handling the duality gap, the method has been found to be useful only for certain nonlinear problems with special structure. The method is of importance in the decomposition of large-scale problems with separable functions. In such cases minimization of the Lagrangian can be carried out efficiently due to the special structure of the constrained problem (E2), (L1), (L5).

For a convex program, if $X^*$ is the optimal solution to the constrained problem with corresponding Lagrange multiplier vector $\lambda^*$, then $X^*$ is the unconstrained minimizer of $L(X, \lambda^*)$. However, if $X^*$ is a local solution to a nonconvex program with corresponding Lagrange multiplier vector $\lambda^*$, then $X^*$ may not be the unconstrained local minimizer of $L(X, \lambda^*)$ and $L(X, \lambda^*)$ may even have negative second derivatives at $X^*$ in certain directions normal to the feasible manifold $F$ (R3). Since curvature at a point is determined by the second partial derivatives, attempts were made to make the Lagrangian associated with nonconvex programs a convex function by adding quadratic penalty terms to it. This concept was first suggested by Arrow and Solow (A1) in connection with the solution of a nonconvex equality constrained problem using the differential gradient method. Arrow and Solow augmented the classical Lagrangian with quadratic penalty terms and this elegant idea made the new augmented Lagrangian locally convex. This idea was independently reconsidered in an entirely different algorithmic context for equality constrained problems by Hestenes (H2), Powell (P3) and Haarhoff and Buys (H1). The algorithms that resulted from these efforts belong to the Augmented Lagrangian Penalty Function Technique which is reviewed in Section 3.


III. AUGMENTED LAGRANGIAN PENALTY FUNCTION TECHNIQUE

3.1 Introduction

The ALAG penalty function technique may be reviewed from two entirely different points of view. The first viewpoint is that the methods that belong to this technique modify the Lagrangian associated with a nonconvex or a weakly convex constrained problem to have a local convexity property. This is because the characterization of the solution to a constrained problem in terms of a saddle-point of the Lagrangian depends heavily on convexity properties of the underlying problem. The local saddle-point property is obtained by the presence of a convexifying parameter in the Lagrangian which makes the associated Hessian positive definite for large enough, but finite, values of the parameter. Following this idea of local convexification many different modifications of the classical Lagrangian have been proposed to close the duality gap in nonconvex programming (A1), (A2), (M2).
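The effect of a convexifying parameter can be seen on a two-variable example, constructed here for illustration rather than taken from the report. Consider minimizing $f(X) = x_1^2 - x_2^2$ subject to $c(X) = x_2 = 0$, whose solution is $X^* = (0, 0)$ with multiplier $\lambda^* = 0$, and add a quadratic penalty term $\tfrac{1}{2}\sigma\,c(X)^2$ to the Lagrangian.

```latex
\begin{align*}
L(X,\lambda^*) &= x_1^2 - x_2^2, &
\nabla^2 L(X^*,\lambda^*) &=
  \begin{pmatrix} 2 & 0 \\ 0 & -2 \end{pmatrix}
  \quad \text{(indefinite)}, \\
L(X,\lambda^*) + \tfrac{1}{2}\sigma\,c(X)^2 &= x_1^2 + \bigl(\tfrac{1}{2}\sigma - 1\bigr)x_2^2, &
\nabla^2 \bigl[\,\cdot\,\bigr](X^*) &=
  \begin{pmatrix} 2 & 0 \\ 0 & \sigma - 2 \end{pmatrix}
  \quad \text{(positive definite for } \sigma > 2\text{)}.
\end{align*}
```

The classical Lagrangian has negative curvature in the direction normal to the feasible set, so $X^*$ is not one of its unconstrained minimizers; with the penalty term the Hessian becomes positive definite for every finite $\sigma > 2$, and $X^*$ is then a local unconstrained minimizer.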

The second viewpoint is to consider the technique and the quadratic penalty function method within a common generalized penalty function framework. The approach here is to circumvent instabilities associated with the classical penalty function method by adding penalty terms to the Lagrangian function. The advantages of using a first-order penalty function have been listed by Lootsma (L3) and McCormick (M5). Therefore methods that augment the Lagrangian with quadratic penalty terms are considered in detail. The development of the ALAG penalty function technique is traced from the second viewpoint.


3.2 Review of the Technique for Equality Constrained Problem

3.2.1 Equality Constrained Problem

The equality constrained problem P2 may be represented as follows:

P2: Minimize $f(X)$

subject to $c_i(X) = 0$, $i = 1, 2, \ldots, m$, $m < n$.

$f(X)$ and $c_i(X)$, $i = 1, 2, \ldots, m$, are real-valued functions of class $C^{(2)}$ defined on a nonempty open set $L \subseteq E^n$. The Lagrangian associated with P2 is

$$L(X, \lambda) = f(X) - \sum_{i=1}^{m} \lambda_i c_i(X), \quad \lambda \in E^m. \qquad [4]$$

The gradient and Hessian of this Lagrangian with respect to $X$ are $\nabla L(X, \lambda)$ and $\nabla^2 L(X, \lambda)$ respectively.

Let $X^*$ be an optimal solution to P2 and the problem functions $f(X)$ and $c_i(X)$, $i = 1, 2, \ldots, m$, be of class $C^{(2)}$ in an open neighborhood of $X^*$. The following are assumed to hold at $X^*$.

(i) The point $X^*$ is a regular point of the feasible set

$$F = \{X : c_i(X) = 0,\ i = 1, 2, \ldots, m,\ X \in L \subseteq E^n\}.$$

Let $N^* = N(X^*)$ be the $n \times m$ matrix $[\nabla c_1, \nabla c_2, \ldots, \nabla c_m]$. The regularity of the feasible set at $X^*$ is satisfied when $N^*$ is of full rank.

(ii) There exists a unique Lagrange multiplier vector $\lambda^*$ such that the following first-order necessary conditions for local optimality at $X^*$ are satisfied:

$$\lambda^* \in E^m, \quad c_i(X^*) = 0\ \ \forall i \quad \text{and} \quad \nabla L(X^*, \lambda^*) = 0. \qquad [5]$$

(iii) The second-order necessary conditions for local optimality at $X^*$ are that, in addition to [5],

$$Y^T \nabla^2 L(X^*, \lambda^*)\, Y \ge 0 \quad \forall\, Y \in V \subseteq E^n, \qquad [6]$$

$$V = \{Y : Y^T \nabla c_i = 0\ \ \forall i\}.$$

(iv) The second-order sufficient conditions for $X^*$ to be an isolated local minimum are that, in addition to [5],

$$Y^T \nabla^2 L(X^*, \lambda^*)\, Y > 0 \quad \forall \text{ nonzero } Y \in V. \qquad [7]$$

(v) Strict complementarity holds at $X^*$, i.e., $\lambda_i^* \ne 0\ \ \forall i$.

3.2.2 Powell-Hestenes Augmented Penalty Function

Powell (P3) suggested the following penalty function to solve P2.

$$\phi(X, \theta, S) = f(X) + \tfrac{1}{2} \sum_{i=1}^{m} \sigma_i (c_i(X) - \theta_i)^2 = f(X) + \tfrac{1}{2} (C(X) - \theta)^T S\, (C(X) - \theta) \qquad [8]$$

where $\theta \in E^m$, $C(X)$ is a vector of constraint functions $c_i(X)$, $i = 1, 2, \ldots, m$, and $S$ is a diagonal matrix of order $m$ with diagonal elements $\sigma_i > 0$. Let $\sigma \in E^m$ be a vector with $\sigma_i$ as components. While the classical penalty function for P2 contains at most $m$ control parameters, the above function depends on $2m$ parameters which are the components of $\theta$ and $\sigma$. The main difference between the classical quadratic penalty function and [8] is the introduction of parameters $\theta$. In [8] quadratic penalty terms have been added to the Lagrangian associated with P2. The augmented penalty function $\phi$ is used in the algorithm as follows.

Algorithm A1:

(i) Select $\theta^{(1)} = 0$, $k = 0$, $S^{(1)} = I$ and $X^{(0)} \notin F$.

(ii) $k = k + 1$.

(iii) Minimize $\phi(X, \theta^{(k)}, S^{(k)})$ to find $X^{(k)} = X(\theta^{(k)}, S^{(k)})$, starting the unconstrained minimization from $X^{(k-1)}$.

(iv) If $C(X^{(k)})$ is converging sufficiently rapidly to zero then set $\theta^{(k+1)} = \theta^{(k)} - C(X^{(k)})$, $S^{(k+1)} = S^{(k)}$ and return to (ii); otherwise set $\theta^{(k+1)} = (1/10)\,\theta^{(k)}$, $S^{(k+1)} = 10\, S^{(k)}$ and return to (ii).

In step (iii) $\phi$ is minimized with respect to $X$ without constraints for fixed values of $\theta^{(k)}$ and $S^{(k)}$ and this is the inner iteration of the algorithm. Step (iv) is the outer iteration in which $\theta^{(k)}$ and $S^{(k)}$ are changed to force constraint satisfaction and cause the sequence of solutions $\{X^{(k)}\}$ to converge to $X^*$ at a reasonably fast rate.
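The inner and outer iterations can be sketched in Python on a small equality constrained problem. This is a minimal illustration under stated assumptions, not the report's computer program: the test problem (minimize $x_1^2 + x_2^2$ subject to $x_1 + x_2 - 1 = 0$), the scalar $\sigma$ in place of the matrix $S$, the fixed-step gradient descent used as the inner minimizer, the multiplier correction $\theta \leftarrow \theta - c(X)$, and the factor-of-four acceptance test are all choices made here.

```python
def c(x):
    return x[0] + x[1] - 1.0                 # equality constraint c(x) = 0


def grad_phi(x, theta, sigma):
    # Gradient of phi = x1**2 + x2**2 + 0.5 * sigma * (c(x) - theta)**2
    r = sigma * (c(x) - theta)
    return [2.0 * x[0] + r, 2.0 * x[1] + r]


def minimize_phi(x, theta, sigma, iters=2000):
    # Inner iteration: fixed-step gradient descent (an assumed minimizer).
    step = 1.0 / (2.0 + 2.0 * sigma)         # 1 / largest Hessian eigenvalue
    for _ in range(iters):
        g = grad_phi(x, theta, sigma)
        x = [x[0] - step * g[0], x[1] - step * g[1]]
    return x


def multiplier_method(x0, max_outer=50, tol=1e-10):
    x, theta, sigma = list(x0), 0.0, 1.0
    prev_violation = float("inf")
    for _ in range(max_outer):
        x = minimize_phi(x, theta, sigma)    # inner iteration
        violation = abs(c(x))
        if violation < tol:
            break
        if violation <= 0.25 * prev_violation:
            theta = theta - c(x)             # keep sigma, correct theta
        else:
            theta, sigma = theta / 10.0, sigma * 10.0   # sigma*theta unchanged
        prev_violation = violation
    multiplier = sigma * (theta - c(x))      # estimate of lambda*
    return x, multiplier, sigma


x_opt, lam, sigma_final = multiplier_method([2.0, -3.0])
```

For this problem the constrained solution is $(0.5, 0.5)$ with Lagrange multiplier $1$. The sketch raises $\sigma$ from 1 to 10 once, when the residual fails the factor-of-four test, and then converges with $\sigma$ held fixed.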

The scheme for adjusting $\theta$ parameters in the outer iteration is based on the observation that if $X^{(k)}$ is the minimizer of $\phi(X, \theta^{(k)}, S^{(k)})$ in the inner iteration, then $X^{(k)}$ is also a solution of the problem

Minimize $f(X)$, $X \in L \subseteq E^n$

subject to $C(X) = C(X^{(k)})$.

In order to solve the equality constrained problem P2 it is sufficient to find $\theta^{(k)}$ and $S^{(k)}$ such that $X^{(k)} = X(\theta^{(k)}, S^{(k)})$ solves the system of nonlinear equations

$$C(X(\theta^{(k)}, S^{(k)})) = 0. \qquad [9]$$

The above system of equations is in terms of $2m$ parameters $\theta_i$ and $\sigma_i$, $i = 1, 2, \ldots, m$. One vector of parameters $\theta^{(k)}$ or $\sigma^{(k)}$ may be fixed and [9] then is a system of $m$ equations in $m$ remaining parameters.

If $\theta^{(k)}$ is fixed, then [8] reduces to a basic penalty transformation. Specifically when $\theta$ parameters are set to zero, $\phi$ becomes the classical quadratic penalty function. In such a case convergence of the sequence $\{X^{(k)}\}$ to $X^*$ is ensured by letting $\sigma_i \to \infty$, $i = 1, 2, \ldots, m$. This leads to numerical instabilities and slow convergence. Therefore in Powell's method $S^{(k)}$ is held constant and $\theta^{(k)}$ is changed to force constraint satisfaction through iterative solution of [9]. Powell (P2) derived a simple correction for adjusting $\theta^{(k)}$ parameters when $S^{(k)}$ is fixed by applying generalized Newton iteration to solve [9]. This correction is represented as

$$\theta^{(k+1)} = \theta^{(k)} - C(X^{(k)}). \qquad [10]$$

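By definition $X^{(k)}$ is the unconstrained minimizer of $\phi(X, \theta^{(k)}, S^{(k)})$. Therefore $\nabla \phi(X^{(k)}, \theta^{(k)}, S^{(k)}) = 0$, i.e.,

$$\nabla f(X^{(k)}) + \sum_{i=1}^{m} \sigma_i \left(c_i(X^{(k)}) - \theta_i^{(k)}\right) \nabla c_i(X^{(k)}) = 0. \qquad [11]$$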

Continuity of $C(X)$ in the neighborhood of the local minimizer $X^*$ of P2 implies that the matrix $N(X^{(k)})$ is of full rank for $X^{(k)}$ sufficiently close to $X^*$. When $X^{(k)}$ is in the neighborhood of $X^*$ and when $X^{(k)} \to X^*$ the estimates

$$\lambda^{(k)} = -S^{(k)} \left(C(X^{(k)}) - \theta^{(k)}\right) \qquad [12]$$

exist and have as limit points the unique values $\lambda^* = S^* \theta^*$, where $\theta^*$ and $S^*$ are the parameters corresponding to $X^*$. Hence the final value of the product $S\theta$, in the limiting sense, is a constant and may be considered to be independent of $S$ when $S$ is fixed and $\theta$ is adjusted to let $\theta \to \theta^*$. For this reason, when $S$ is increased in step (iv) of algorithm A1 to improve the rate of convergence of the sequence $\{\max_i |c_i(X^{(k)})|\}$ to 0 and $\{X^{(k)}\}$ to $X^*$, $\theta$ is decreased to keep $S\theta$ a constant.

Convergence of the algorithm is measured using the sequence $\{\max_i |c_i(X^{(k)})|\}$. Under the assumptions in 3.2.1 and when the Hessian matrix of $\phi$ is positive definite at $X^*$, Powell (P2) proved that the rate of convergence is linear and the convergence ratio depends on $1/\sigma_i$ for $\sigma_i > \sigma'$. The threshold value $\sigma'$ is a large but finite positive real number. Therefore by choosing $S$ to be large so that $S$ is close to $S'$, where $S' = \sigma' I$, the algorithm can be made to have linear convergence at any arbitrary rate. Superlinear convergence is achieved when $\sigma_i \to \infty$. In Powell's algorithm the rate of convergence is taken to be satisfactory when the maximum residual, $\max_i |c_i(X^{(k)})|$, of the system of equations [9] is reduced by a factor of four on each iteration.

The reason for preferring the slower rate of convergence implied by the use of factor four is that faster convergence tends to make the inner iterations more difficult (P2). When the sequence $\{\max_i |c_i(X^{(k)})|\}$ either fails to converge or converges to zero at too slow a rate, $S$ is increased by a factor of ten. The choice of factor ten to increase $S$ is arbitrary. Numerical evidence indicates that the value of $\sigma$ is seldom required to be greater than $10^2$ to ensure rapid convergence (R11).

The Hessian matrix of $\phi$ depends on both $\theta$ and $S$. The change in this matrix is dominated by the increase in $S$ (P2). This is another reason for using a factor of ten to increase $S$ when the rate of convergence is slow and keeping $S$ constant when the rate of convergence is satisfactory.

If $S$ is chosen to be large in the initial iteration, instead of gradually increasing $S$, the Hessian of $\phi$ becomes ill-conditioned and the unconstrained minimization of $\phi$ in the inner iteration becomes very difficult to perform. Further, for a large $S$, an arbitrary starting point $X^{(0)}$ and arbitrary values of the $\theta^{(k)}$ parameters, the sequence $\{X^{(k)}\}$ may not converge to $X^*$. Therefore $S$ is increased so as to force $X^{(k)}$ into a region in which the sequence $\{X^{(k)}\}$ locally converges to $X^*$. Once this region is reached, $S$ is kept constant and the $\theta^{(k)}$ parameters are adjusted so as to let $X^{(k)} \to X^*$. Further, the gradual increase of $S$ is designed to make $\phi$ continuous and continuously differentiable with respect to $X$ for all values of the parameters.

In Powell's algorithm the minimizations in the inner iteration are not beset by computational difficulties associated with the basic penalty function transformations. The minimizations are well scaled and progressively less computational effort is required as $k$ increases and $X^{(k)} \to X^*$.

Hestenes (H2), independently of Powell and at about the same time, proposed a similar method for solving P2 and he called it the method of multipliers. The method is based on the observation that if $X^*$ is a nonsingular minimum of P2, there exists a multiplier vector $\lambda^*$ and a constant $\sigma$ such that $X^*$ affords an unconstrained local minimum to the function

$$T(X, \lambda^*, S) = f(X) - \lambda^{*T} C(X) + \tfrac{1}{2} (C(X))^T S\, C(X), \qquad [13]$$

where $S = \sigma I$.

Conversely, if $C(X^*) = 0$ and $X^*$ affords a minimum to [13], then $X^*$ affords a minimum to P2. In the method of multipliers a large positive constant $\sigma$ is suitably chosen and is held fast. The augmented penalty function considered is

$$T(X, \lambda, S) = f(X) - \lambda^T C(X) + \tfrac{1}{2} (C(X))^T S\, C(X), \qquad [14]$$

where $\lambda \in B$ and $B$ is an arbitrary compact subset of $E^m$. The function in [14] is sequentially minimized for successive estimates $\lambda^{(k)}$ of the unique Lagrange multiplier vector $\lambda^*$ at $X^*$.

The unconstrained minimization of $T(X, \lambda^{(k)}, S)$ for an estimate $\lambda^{(k)}$ of $\lambda^*$ is the inner iteration. Let $X^{(k)} = X(\lambda^{(k)}, S)$ be an unconstrained minimizer of $T(X, \lambda^{(k)}, S)$. In the outer iteration the estimate $\lambda^{(k)}$ is updated so as to cause $\lambda^{(k)} \to \lambda^*$.

Hestenes suggested the following formula for adjusting the multiplier vector $\lambda^{(k)}$:

$$\lambda^{(k+1)} = \lambda^{(k)} - \bar{S}\, C(X^{(k)}), \qquad [15]$$

where $\bar{S} = \bar{\sigma} I$, $0 < \bar{\sigma} \le \sigma$, $\bar{\sigma} = \gamma\sigma$ and $0 < \gamma \le 1$. The relation [15] is derived from the observation that $X^{(k)}$ is a local minimizer of $T(X, \lambda^{(k)}, S)$ and $\lambda^{(k+1)}$ is chosen so that first order necessary conditions are satisfied at $X^{(k)}$ for P2.

Hestenes (H2) did not analyze the convergence of the method, but subsequently (H4) established that the method converges linearly and that superlinear convergence may be achieved when $\sigma \to \infty$. In practical applications very fast linear convergence occurs for a large but finite value of $\sigma$. Convergence is induced not only by a large value of $\sigma$ but also by the multiplier iteration [15] (F8).

In Powell's method when $S$ is fixed and the $\theta$ parameters are adjusted to let $X^{(k)} \to X^*$, the unique Lagrange multiplier vector is $\lambda^* = S\theta^*$, where $\theta^*$ corresponds to the vector of parameters at $X^*$. This implies that a connection can be established between the augmented function $\phi$ in [8] and $T$ in [14] using the relationship

$$\lambda_i = \sigma_i \theta_i, \quad i = 1, 2, \ldots, m. \qquad [16]$$

From [8], [14] and [16],

$$\phi(X, \theta, S) = T(X, \lambda, S) + \frac{1}{2} \sum_{i=1}^{m} \frac{\lambda_i^2}{\sigma_i}. \qquad [17]$$
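The relation [17] can be verified by expanding the square. The sketch below assumes that the function in [8] has the form $\phi = f + \tfrac{1}{2}\sum_i \sigma_i (c_i - \theta_i)^2$, which is what the gradient condition [11] suggests; that form is an assumption here because [8] is not restated in this section.

```latex
\begin{aligned}
\phi(X,\theta,S)
  &= f(X) + \tfrac{1}{2}\sum_{i=1}^{m} \sigma_i \bigl(c_i(X) - \theta_i\bigr)^2 \\
  &= f(X) - \sum_{i=1}^{m} \sigma_i\theta_i\, c_i(X)
     + \tfrac{1}{2}\sum_{i=1}^{m} \sigma_i c_i^2(X)
     + \tfrac{1}{2}\sum_{i=1}^{m} \sigma_i \theta_i^2 \\
  &= f(X) - \lambda^T C(X) + \tfrac{1}{2}\,(C(X))^T S\, C(X)
     + \tfrac{1}{2}\sum_{i=1}^{m} \frac{\lambda_i^2}{\sigma_i}
     \qquad (\lambda_i = \sigma_i\theta_i) \\
  &= T(X,\lambda,S) + \tfrac{1}{2}\sum_{i=1}^{m} \frac{\lambda_i^2}{\sigma_i}.
\end{aligned}
```

The last term does not involve $X$, so for fixed parameters $\phi$ and $T$ share the same unconstrained minimizers.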

The difference between $\phi$ and $T$ is independent of $X$. If $X(\theta, S)$ and $X(\lambda, S)$ are unconstrained minimizers of $\phi$ and $T$ respectively for any $S$ and if $\theta$ and $\lambda$ are related as in [16], then $X(\theta, S) = X(\lambda, S)$. Therefore the iterative methods suggested by Powell and Hestenes for changing the $\theta$ and $\lambda$ parameters are the same.

In view of the equivalence relationship [17] between $\phi$ and $T$, the numerical algorithm A1 is discussed in terms of the augmented penalty function $T$. In the outer iteration adjustment of the $\lambda$ parameters using [15] is considered, assuming that $\theta$ and $\lambda$ are related by [16]. The algorithm A1 is discussed and analyzed using $\lambda$ parameters to emphasize the primal-dual nature of the method, which iterates with an approximation $\lambda^{(k)}$ to the Lagrange multipliers $\lambda^*$ in such a way as to make $\lambda^{(k)} \to \lambda^*$.

The algorithm A1 is now modified and denoted as the Powell-Hestenes augmented penalty function algorithm A2. The convergence of the algorithm is measured in terms of $B = \max_i |c_i(X)|$.

Algorithm A2:

(i) Select $\lambda^{(1)} = \lambda^{(0)}$, $S^{(1)} = S^{(0)}$, $k = 0$, an arbitrary starting point $X^{(0)}$ and $B^{(1)} = B_0$ where $B_0 \ge \max_i |c_i(X^{(0)})|$.

(ii) $k = k + 1$.

(iii) Minimize $T(X, \lambda^{(k)}, S^{(k)})$ to find $X^{(k)} = X(\lambda^{(k)}, S^{(k)})$, starting the unconstrained minimization from $X^{(k-1)}$.

(iv) Find $V = \{i : |c_i(X^{(k)})| > B^{(k)}/4\}$. If $\max_i |c_i(X^{(k)})| \ge B^{(k)}$, set $B^{(k+1)} = B^{(k)}$ and go to (vii).

(v) $B^{(k+1)} = \max_i |c_i(X^{(k)})|$. If $B^{(k+1)} < E$, stop. Here $E$ is a specified tolerance for $B$.

(vi) If $B^{(k+1)} \le B^{(k)}/4$ or $\lambda^{(k)} = \lambda^{(k-1)}$, set $\lambda^{(k+1)} = \lambda^{(k)} - S^{(k)} C(X^{(k)})$ and go to (ii).

(vii) Set $\lambda^{(k+1)} = \lambda^{(k)}$ and $\sigma_i^{(k+1)} = 10\,\sigma_i^{(k)}$ $\forall i \in V$; go to (ii).
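The steps of algorithm A2 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the report's program: the inner minimizer `minimize_bb` (a Barzilai-Borwein gradient iteration) is a stand-in chosen for brevity, the function names and the toy problem are hypothetical, and $B_0$ is simply taken as $\max_i |c_i(X^{(0)})|$.

```python
def grad_T(x, lam, sigma, f_grad, c, c_jac):
    """Gradient of T = f - lam^T C + 1/2 C^T S C with S = diag(sigma)."""
    g = list(f_grad(x))
    for ci, li, si, gi in zip(c(x), lam, sigma, c_jac(x)):  # gi = grad c_i
        for j in range(len(g)):
            g[j] += (si * ci - li) * gi[j]
    return g


def minimize_bb(grad, x0, tol=1e-10, max_iter=10000):
    """Inner iteration: Barzilai-Borwein gradient descent (assumed stand-in)."""
    x, g, step = list(x0), grad(x0), 1e-2
    for _ in range(max_iter):
        if max(abs(gi) for gi in g) < tol:
            break
        x_new = [xi - step * gi for xi, gi in zip(x, g)]
        g_new = grad(x_new)
        s = [a - b for a, b in zip(x_new, x)]
        y = [a - b for a, b in zip(g_new, g)]
        sy = sum(si * yi for si, yi in zip(s, y))
        step = sum(si * si for si in s) / sy if sy > 0 else 1e-2
        x, g = x_new, g_new
    return x


def algorithm_a2(f_grad, c, c_jac, x0, lam0, sigma0, eps=1e-8, max_outer=50):
    x, lam, sigma = list(x0), list(lam0), list(sigma0)
    lam_prev = list(lam0)                        # (i) lambda^(1) = lambda^(0)
    B = max(abs(ci) for ci in c(x))              # (i) B_0 = max|c_i(X^(0))|
    for k in range(1, max_outer + 1):            # (ii)
        x = minimize_bb(lambda z: grad_T(z, lam, sigma, f_grad, c, c_jac), x)  # (iii)
        cx = c(x)
        resid = max(abs(ci) for ci in cx)
        V = [i for i, ci in enumerate(cx) if abs(ci) > B / 4]  # (iv)
        if resid >= B:
            update = False                       # B unchanged, go to (vii)
        else:
            B_old, B = B, resid                  # (v)
            if B < eps:
                return x, lam, sigma, k
            update = B <= B_old / 4 or lam == lam_prev  # (vi)
        if update:                               # (vi) multiplier update
            lam_prev = lam
            lam = [li - si * ci for li, si, ci in zip(lam, sigma, cx)]
        else:                                    # (vii) increase penalties in V
            lam_prev = lam
            for i in V:
                sigma[i] *= 10.0
    return x, lam, sigma, max_outer


# Toy problem (assumed): minimize x1^2 + x2^2 subject to x1 + x2 - 1 = 0.
f_grad = lambda x: [2 * x[0], 2 * x[1]]
c = lambda x: [x[0] + x[1] - 1.0]
c_jac = lambda x: [[1.0, 1.0]]
x, lam, sigma, k = algorithm_a2(f_grad, c, c_jac, [0.0, 0.0], [0.0], [1.0])
```

For this toy problem the minimizer is $X^* = (0.5, 0.5)$ with $\lambda^* = 1$ under the sign convention of [14]. With $\sigma$ fixed, the multiplier error shrinks by the factor $1/(1+\sigma)$ per outer iteration, which illustrates the dependence of the linear convergence ratio on $1/\sigma$ discussed above.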

When second order sufficiency conditions hold good at $X^*$ for P2, there exists a $\sigma' > 0$ such that for $\sigma_i > \sigma'$ $\forall i$, the Hessian matrix of both $\phi(X, \theta^*, S)$ and $T(X, \lambda^*, S)$ at $X^*$ is positive definite and $X^*$ is a strong local minimum of $\phi(X, \theta^*, S)$ and $T(X, \lambda^*, S)$ (B2), (B7), (F8), (H2). It should be noted that the local convexity of $\phi(X, \theta^*, S)$ and $T(X, \lambda^*, S)$ near $X^*$ is established without any assumptions about the convexity of problem P2. The aim of the algorithm A2 is to keep $S$ constant and adjust $\lambda^{(k)}$ so as to cause $X^{(k)} \to X^*$.

Therefore in subsequent discussions it is assumed that $\sigma_i > \sigma'$ $\forall i$ have been chosen and held fast so that $\phi$ and $T$ are locally convex. For this reason the explicit dependence of $X$ on $S$ is dropped and $X(\lambda, S)$ is represented as $X(\lambda)$.

Haarhoff and Buys (H1) proposed a numerical algorithm very similar to the Powell-Hestenes method. They were motivated by the following observations about the traditional quadratic penalty function approach to solve P2. Let the quadratic penalty function for P2 be

$$P(X, \sigma) = f(X) + \sigma \sum_{i=1}^{m} (c_i(X))^2, \quad \sigma > 0.$$

Let $X(\sigma)$ be an unconstrained minimizer of $P(X, \sigma)$ for a large value of the control parameter $\sigma$ and $X^*$ be a local minimizer of P2. The gradient of $P(X, \sigma)$ is zero at $X(\sigma)$ but the gradient at $X^*$ is $\nabla f(X^*)$. Therefore, in the usual case when $\nabla f(X^*)$ is nonzero, $X(\sigma)$ and $X^*$ have to be different. Let $\bar{X}$ be a solution to the under-determined system of equations $C(X) = 0$. At $\bar{X}$ the gradient of $P(X, \sigma)$ is $\nabla f(\bar{X})$, which is generally not zero. Therefore $\bar{X}$ and $X(\sigma)$ are different and for any finite value of $\sigma$, $X(\sigma)$ is neither a solution to P2 nor satisfies $C(X) = 0$. Usually $X(\sigma)$ tends to $X^*$ when $\sigma \to \infty$ (L5), (Z1). From these observations Haarhoff and Buys added a linear combination of constraints to $P(X, \sigma)$ to obtain

$$T(X, \lambda, S) = f(X) - \lambda^T C(X) + \tfrac{1}{2} (C(X))^T S\, C(X), \quad S = \sigma I,$$

where $\lambda \in E^m$ and $\sigma > 0$. This function achieved their objective, i.e., it balanced the gradient of $f(X)$ in the vicinity of the minimum by a linear combination of gradients of the constraint functions $C(X)$.
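The observation that $X(\sigma)$ is infeasible for every finite $\sigma$ can be illustrated on a small example. The sketch below uses a hypothetical toy problem (minimize $x_1^2 + x_2^2$ subject to $x_1 + x_2 - 1 = 0$), for which the minimizer of $P(X, \sigma)$ is available in closed form; the function names are illustrative only.

```python
def penalty_minimizer(sigma):
    """Minimizer of P(X, sigma) = x1^2 + x2^2 + sigma * (x1 + x2 - 1)^2.

    By symmetry x1 = x2 = t, so P = 2 t^2 + sigma (2 t - 1)^2 and
    dP/dt = 4 t + 4 sigma (2 t - 1) = 0 gives t = sigma / (1 + 2 sigma).
    """
    t = sigma / (1.0 + 2.0 * sigma)
    return (t, t)


def constraint(x):
    """Equality constraint c(X) = x1 + x2 - 1 of the toy problem."""
    return x[0] + x[1] - 1.0


# The constraint residual at X(sigma) is -1 / (1 + 2 sigma): nonzero for any
# finite sigma, and it only vanishes in the limit sigma -> infinity.
residuals = [constraint(penalty_minimizer(s)) for s in (1.0, 10.0, 100.0)]
```

The residual shrinks only like $1/\sigma$, which is why the pure penalty approach needs very large $\sigma$, whereas the added multiplier term lets the augmented function attain feasibility with a finite $\sigma$.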


The augmented penalty function proposed by Haarhoff and Buys is identical to the Powell-Hestenes augmented penalty function for P2. However the numerical algorithm of Haarhoff and Buys has some distinct features. They noted that the multiplier updates [15] are valid only when the function $T(X, \lambda^{(k)}, S)$ is minimized exactly for each $\lambda^{(k)}$ and that it is better to terminate the inner iterations when a better value of $T(X, \lambda^{(k)}, S)$ is obtained. They suggested that the multipliers in the outer iteration be obtained from the first order necessary condition

$$\nabla f(X^{(k)}) = N(X^{(k)})\, \lambda, \quad \lambda \in E^m. \qquad [18]$$

The condition [18] represents an over-determined system of $n$ equations in $m$ parameters. Taking the scalar product of [18] with each $\nabla c_i(X^{(k)})$, the following system of equations is obtained.

$$N^T(X^{(k)})\, \nabla f(X^{(k)}) = N^T(X^{(k)})\, N(X^{(k)})\, \lambda, \quad \lambda \in E^m. \qquad [19]$$

The expression in [19] represents a system of $m$ equations in $m$ parameters $\lambda$ that may easily be solved for $\lambda$. This, in effect, is a least squares solution to [18]. The vector of multipliers $\lambda$ is an estimate of the unique Lagrange multiplier vector $\lambda^*$ at $X^*$ and $\lambda$ tends to $\lambda^*$.
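The normal equations [19] can be formed and solved directly. The following sketch assumes that the columns of $N$ are the constraint gradients $\nabla c_i$, supplied as a list of vectors, and uses a small Gaussian elimination routine; the function names and the numerical data are hypothetical.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]   # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for j in range(col, n + 1):
                M[r][j] -= factor * M[col][j]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(M[r][j] * x[j] for j in range(r + 1, n))
        x[r] = (M[r][n] - tail) / M[r][r]
    return x


def least_squares_multipliers(grad_f, constraint_grads):
    """Solve N^T N lam = N^T grad_f, where column i of N is grad c_i."""
    dot = lambda u, v: sum(ui * vi for ui, vi in zip(u, v))
    NtN = [[dot(a, b) for b in constraint_grads] for a in constraint_grads]
    Ntg = [dot(a, grad_f) for a in constraint_grads]
    return solve_linear(NtN, Ntg)


# Illustrative data with n = 3, m = 2: grad_f = 2 * a1 - 1 * a2 exactly,
# so the least squares estimate recovers lam = (2, -1).
a1, a2 = [1.0, 0.0, 1.0], [0.0, 1.0, 1.0]
lam = least_squares_multipliers([2.0, -1.0, 1.0], [a1, a2])
```

When $\nabla f$ does not lie exactly in the span of the constraint gradients, as at an inexact inner minimizer, the same call returns the multipliers that minimize the residual of [18] in the least squares sense, provided the gradients are linearly independent.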

Haarhoff and Buys were more concerned with computational considerations than with convergence or duality aspects of the algorithm. They suggested that the problem functions be scaled so that the gradients are of the same magnitude and that $\sigma_i$ be on the order of ten. In this algorithm $\sigma_i$, $i = 1, 2, \ldots, m$, are kept constant and in the inner iteration the variable metric method of Davidon-Fletcher-Powell (DFP) is used to minimize $T(X, \lambda^{(k)}, S)$. The approximation to $[\nabla^2 T]^{-1}$ is updated using the DFP update formula (M11). A restoration step is included in the inner iteration and in this step $T$ is minimized without using derivatives in a direction that leads to the satisfaction of linearized constraints. Other numerical aspects of the algorithm, such as the various stopping criteria for inner and outer iterations and updating the approximation to the inverse Hessian $[\nabla^2 T]^{-1}$, are discussed in reference (H1).

The elegant idea of local convexification of the Lagrangian was first introduced by Arrow and Solow (A1). They suggested addition of quadratic penalty terms to the classical Lagrangian to arrive at a modified Lagrangian that was locally convex. They were motivated by adaptation of the differential gradient scheme, developed by Arrow, et al. (A1) for approaching saddle points of convex programs, to nonconvex programs. Their differential gradient method is a small step-size algorithm while those of Hestenes, Powell and Haarhoff and Buys are large step-size methods.

In the above contributions to the augmented penalty function technique duality concepts were not employed. Primal-dual interpretation of the technique was analyzed by Buys (B7), Luenberger (L5), Rockafellar (R12) and Bertsekas (B2), (B3). A detailed review of the duality results may be found in reference (F8). The duality results are summarized briefly in the next section.

3.3 Review of the Technique for a Constrained Problem with Equalities and Inequalities

3.3.1 Constrained Problem

The problem P3 with equality and inequality constraints is represented as

P3: Minimize $f(X)$, $X \in L \subseteq E^n$

Subject to $c_i(X) = 0$, $i = 1, 2, \ldots, k$

$c_i(X) \ge 0$, $i = k+1, \ldots, m$, $0 \le k \le n$.

The real valued functions $f(X)$ and $c_i(X)$ $\forall i$ are defined on a nonempty open set $L \subseteq E^n$. Let $X^*$ be a local optimal solution to P3. The problem functions are of class $C^{(2)}$ on $L$ and specifically in an open neighborhood of $X^*$. The Lagrangian associated with P3 is

$$L(X, \lambda) = f(X) - \lambda^T C(X), \quad \lambda \in E^m,\ X \in L \subseteq E^n. \qquad [20]$$

The following conditions are assumed to hold good at $X^*$ (F1), (M13).

(1) $X^*$ is a regular point of the feasible region

$$F = \{X : c_i(X) = 0,\ 1 \le i \le k \ \text{and}\ c_i(X) \ge 0,\ k < i \le m\}.$$

Let $E = \{i : 1 \le i \le k\}$ and $I = \{i : c_i(X^*) = 0,\ k < i \le m\}$. Then $X^*$ is a regular point of $F$ when $\{\nabla c_i(X^*)\}$, $i \in E \cup I$, is a linearly independent set.

(2) There exists a unique Lagrange multiplier vector $\lambda^* \in E^m$ such that the Kuhn-Tucker conditions are satisfied at $(X^*, \lambda^*)$:

$$c_i(X^*) = 0,\ i \in E; \qquad c_i(X^*) \ge 0,\ \lambda_i^* \ge 0,\ \lambda_i^* c_i(X^*) = 0,\ i \notin E; \qquad \nabla L(X^*, \lambda^*) = 0. \qquad [21]$$

These are first-order necessary conditions for local optimality at $X^*$, and $(X^*, \lambda^*) \in E^{n+m}$ satisfying [21] is termed a Kuhn-Tucker point.
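The conditions [21] are straightforward to test numerically at a candidate pair $(X, \lambda)$. The sketch below is illustrative only: the function name, argument layout and tolerance are assumptions, and the data come from a hypothetical toy problem (minimize $x_1^2 + x_2^2$ subject to $x_1 + x_2 - 1 = 0$ and $x_1 \ge 0$).

```python
def is_kuhn_tucker_point(grad_f, c_vals, c_grads, lam, eq, tol=1e-8):
    """Check the conditions [21] with L(X, lam) = f(X) - lam^T C(X).

    grad_f  : gradient of f at X
    c_vals  : constraint values c_i(X)
    c_grads : constraint gradients, c_grads[i] = grad c_i(X)
    lam     : multiplier estimates
    eq      : set of indices of equality constraints (the set E)
    """
    n = len(grad_f)
    # Stationarity: grad L = grad f - sum_i lam_i grad c_i = 0.
    grad_L = [grad_f[j] - sum(l * g[j] for l, g in zip(lam, c_grads))
              for j in range(n)]
    if any(abs(v) > tol for v in grad_L):
        return False
    for i, (ci, li) in enumerate(zip(c_vals, lam)):
        if i in eq:
            if abs(ci) > tol:                      # c_i = 0 for i in E
                return False
        elif ci < -tol or li < -tol or abs(li * ci) > tol:
            return False                           # feasibility, sign, complementarity
    return True


# At X* = (0.5, 0.5): grad f = (1, 1), c = (0, 0.5), lam* = (1, 0).
ok = is_kuhn_tucker_point([1.0, 1.0], [0.0, 0.5],
                          [[1.0, 1.0], [1.0, 0.0]], [1.0, 0.0], eq={0})
bad = is_kuhn_tucker_point([1.0, 1.0], [0.0, 0.5],
                           [[1.0, 1.0], [1.0, 0.0]], [1.0, 0.5], eq={0})
```

In the second call the multiplier of the inactive inequality is nonzero, so both stationarity and complementarity fail and the point is rejected.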


(3) Second-order necessary conditions for local optimality of $X^*$ are that in addition to [21]

$$Y^T \nabla^2 L(X^*, \lambda^*)\, Y \ge 0 \quad \forall\, Y \in V^* \subseteq E^n, \qquad [22]$$

where $V^* = \{Y : Y^T \nabla c_i(X^*) = 0,\ i \in E \cup I^* \ \text{and}\ Y^T \nabla c_i(X^*) \ge 0,\ i \in I - I^*\}$, $I$ is the index set of active inequalities, $I^*$ is the index set of strongly active inequalities and $I - I^*$ is the index set of weakly active constraints. However the following weaker second-order necessary condition is usually assumed instead of [22] (F1), (M11).

$$Y^T \nabla^2 L(X^*, \lambda^*)\, Y \ge 0 \quad \forall\, Y \in V \subseteq E^n, \qquad [23]$$

$$V = \{Y : Y^T \nabla c_i(X^*) = 0,\ i \in E \cup I\}.$$

(4) Strict complementarity holds at $(X^*, \lambda^*)$ when

$$\lambda_i^* \ne 0 \ \text{for each}\ 1 \le i \le m \ \text{for which}\ c_i(X^*) = 0. \qquad [24]$$

A weaker form of [24] is

$$\lambda_i^* > 0 \ \text{and}\ c_i(X^*) = 0,\ i \in I. \qquad [25]$$

(5) Second-order sufficient conditions for $X^*$ to be an isolated local minimum are that in addition to [21] and [23]

$$Y^T \nabla^2 L(X^*, \lambda^*)\, Y > 0 \quad \forall\ \text{nonzero}\ Y \in V^*. \qquad [26]$$

However the condition [26] is usually replaced by the verifiable condition (M11),

$$Y^T \nabla^2 L(X^*, \lambda^*)\, Y > 0 \quad \forall\ \text{nonzero}\ Y \in V. \qquad [27]$$

3.3.2 Powell-Hestenes-Rockafellar Penalty Function

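The augmented Lagrangian penalty function for P3 is obtained by combining the Powell-Hestenes penalty function $T$ and the Rockafellar penalty function. The combined function may be represented as

$$T_{PHR}(X, \lambda, \sigma) = f(X) - \sum_{i \in E} \left[\lambda_i c_i(X) - \tfrac{1}{2}\sigma_i c_i^2(X)\right] + \tfrac{1}{2} \sum_{i \notin E} \left[\sigma_i \left(c_i(X) - \frac{\lambda_i}{\sigma_i}\right)_-^2 - \frac{\lambda_i^2}{\sigma_i}\right], \qquad [28]$$

where

$$\left(c_i(X) - \frac{\lambda_i}{\sigma_i}\right)_- = \min\left[\left(c_i(X) - \frac{\lambda_i}{\sigma_i}\right),\ 0\right], \quad \sigma \in E^m_+,\ \lambda \in E^m.$$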

In [28], the factor $\lambda_i/\sigma_i$ represents a penalizing threshold for the $i$th inequality constraint. The multipliers $\lambda_i$ $\forall i$ are unconstrained and this is a useful property of the augmented penalty function $T_{PHR}$. Further, the function $T_{PHR}$ possesses a number of strong properties not exhibited by the classical Lagrangian $L(X, \lambda)$. The following properties of $T_{PHR}$ make it ideal for use in a primal-dual algorithm for solving P3.

Let $M(\lambda)$ be the index set of the inequalities that contribute to the quadratic penalty term in $T_{PHR}$ for an estimate, $\lambda$, of the Lagrange multiplier vector $\lambda^*$.

$$M(\lambda) = \{i : i \notin E,\ c_i(X) < \lambda_i/\sigma_i\}. \qquad [29]$$

Equivalently,

$$M(\lambda) = \{i : i \notin E,\ \lambda_i - \sigma_i c_i(X) > 0\}. \qquad [30]$$

At the local optimum $(X^*, \lambda^*)$ of P3, $M(\lambda^*)$ is the index set, $I^*$, of the strongly active inequalities. By the strict complementarity assumption, $I = I^*$ and therefore $M(\lambda^*)$ represents the active inequality constraints at the local optimum $(X^*, \lambda^*)$. Further, the complement $\overline{E \cup M(\lambda)}$ represents the inactive inequality constraints at the intermediate approximation $(X, \lambda)$ to the solution $(X^*, \lambda^*)$. Let $L = \overline{E \cup M(\lambda)}$. Then,

$$L = \{i : i \notin E,\ c_i(X) \ge \lambda_i/\sigma_i\}. \qquad [31]$$

Equivalently,

$$L = \{i : i \notin E,\ \lambda_i - \sigma_i c_i(X) \le 0\}. \qquad [32]$$

Using the above results the augmented penalty function $T_{PHR}$ may be represented as follows.

$$T_{PHR}(X, \lambda, \sigma) = f(X) - \sum_{i \in E \cup M(\lambda)} \left(\lambda_i - \tfrac{1}{2}\sigma_i c_i(X)\right) c_i(X) - \frac{1}{2} \sum_{i \in L} \frac{\lambda_i^2}{\sigma_i}. \qquad [33]$$
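The agreement between the two representations [28] and [33] can be checked numerically. The sketch below evaluates both forms from given constraint values; the function names and the sample numbers are hypothetical, and the objective value $f(X)$ is passed in directly for simplicity.

```python
def t_phr_28(fx, c, lam, sigma, eq):
    """T_PHR evaluated as in [28]; eq is the index set E of equalities."""
    total = fx
    for i, (ci, li, si) in enumerate(zip(c, lam, sigma)):
        if i in eq:
            total -= li * ci - 0.5 * si * ci * ci
        else:
            minus = min(ci - li / si, 0.0)         # (c_i - lam_i/sigma_i)_-
            total += 0.5 * (si * minus * minus - li * li / si)
    return total


def t_phr_33(fx, c, lam, sigma, eq):
    """T_PHR evaluated as in [33], using the index sets E, M(lam) and L."""
    total = fx
    for i, (ci, li, si) in enumerate(zip(c, lam, sigma)):
        if i in eq or ci < li / si:                # i in E or i in M(lam)
            total -= (li - 0.5 * si * ci) * ci
        else:                                      # i in L
            total -= 0.5 * li * li / si
    return total


# One equality (index 0), one inequality in M(lam), one inequality in L.
args = (1.0, [0.2, -0.1, 3.0], [0.5, 1.0, 2.0], [4.0, 2.0, 1.0], {0})
value_28 = t_phr_28(*args)
value_33 = t_phr_33(*args)
```

For the inequality in $M(\lambda)$ the squared term in [28] expands to $\tfrac{1}{2}\sigma_i c_i^2 - \lambda_i c_i + \tfrac{1}{2}\lambda_i^2/\sigma_i$, and the last piece cancels against $-\tfrac{1}{2}\lambda_i^2/\sigma_i$; for the inequality in $L$ only the constant $-\tfrac{1}{2}\lambda_i^2/\sigma_i$ remains, which is exactly the split shown in [33].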


The representation of $T_{PHR}$ in [33] clearly illustrates that it is obtained by